This is the second lesson in a series that teaches you how to create a DirectX 12 application from scratch. In this lesson, vertex and index data are uploaded to the Graphics Processing Unit (GPU) for rendering. Basic vertex and pixel shaders are described, along with how to create a Pipeline State Object (PSO) that uses those shaders. A root signature defines the parameters that are used by the stages of the rendering pipeline. In this lesson, a simple root signature is created that defines a single constant buffer containing the Model-View-Projection (MVP) matrix that is used to rotate a model in the scene.

Contents

- 1 Introduction

- 2 Heaps and Resources

- 3 Pipeline State Object

- 4 Root Signatures

- 5 DirectX 12 Demo

- 6 The Command Queue Class

- 7 The Game Class

- 8 Shaders

- 9 The Tutorial Class

- 9.1 The Tutorial Header

- 9.2 Tutorial2 Preamble

- 9.3 Tutorial2::Tutorial2

- 9.4 Tutorial2::UpdateBufferResource

- 9.5 Tutorial2::LoadContent

- 9.6 Tutorial2::ResizeDepthBuffer

- 9.7 Tutorial2::OnResize

- 9.8 Tutorial2::OnUpdate

- 9.9 Tutorial2::TransitionResource

- 9.10 Tutorial2::ClearRTV

- 9.11 Tutorial2::ClearDepth

- 9.12 Tutorial2::OnRender

- 9.13 Tutorial2::OnKeyPressed

- 10 Run the Demo

- 11 Conclusion

- 12 Download the Source

- 13 References

Introduction

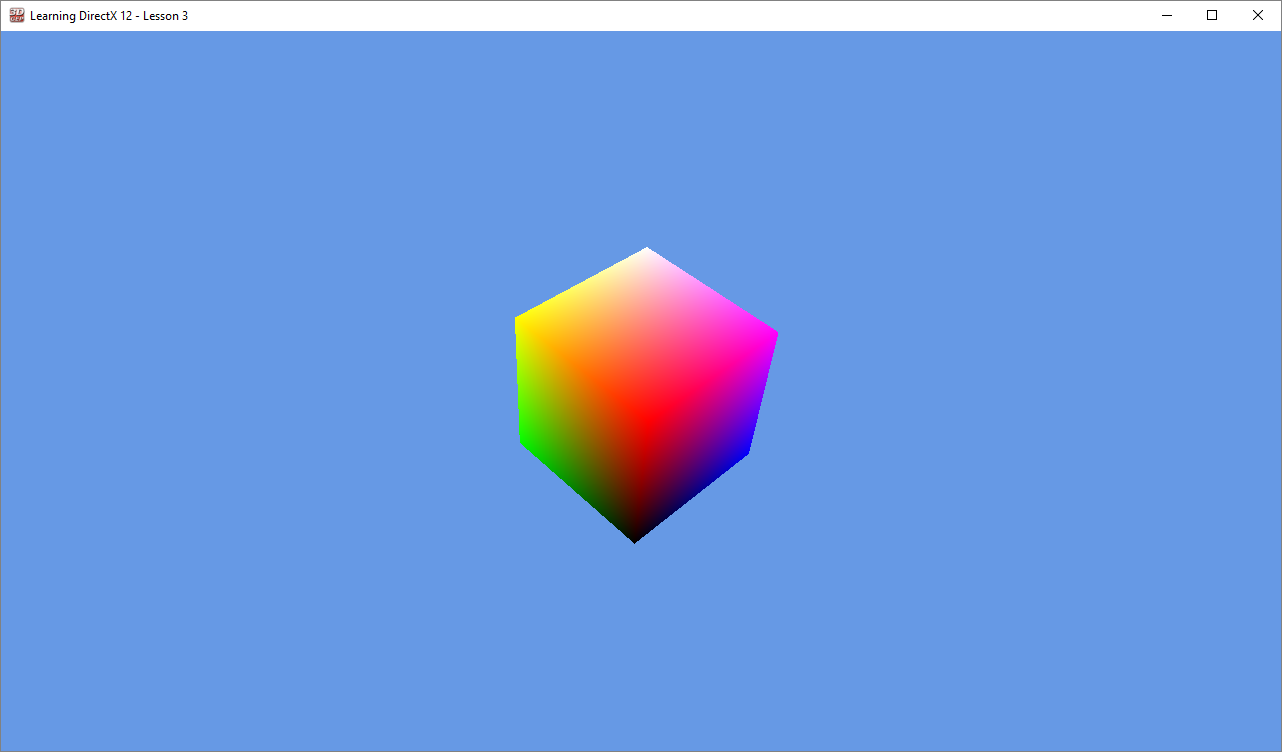

In the previous lesson you learned how to initialize a DirectX 12 application. You also learned how to synchronize the CPU and GPU operations in order to correctly implement N-buffered rendering. At the end of the previous lesson, you had a DirectX 12 application that performed a screen clear but nothing was rendered to the screen. In this lesson, you learn how to render a simple cube primitive. Texturing and lighting are the subject of a later lesson. This lesson assumes you have read and understood the concepts presented in the previous lesson. If not, please take the time to read the first lesson before reading this one.

It is important to understand a few new concepts when working with DirectX 12. One such concept is resource usage in DirectX 12. In previous versions of the DirectX API, there were different interfaces depending on the resource type. For example, a buffer and a texture (1D, 2D, and 3D) each had an interface to describe the resource type. In DirectX 12 the only interface used to describe a resource is the ID3D12Resource interface.

DirectX 12 also provides more control over the way the resource is stored in GPU memory. The ID3D12Heap interface allows for various memory mapping techniques to be implemented which may be used to optimize GPU memory utilization.

In order to provide better state management in DirectX 12, the pipeline state object (PSO) is used to describe the various stages of the rendering (and compute) pipeline. The PSO combines parts of the input assembly (IA), vertex shader (VS), hull shader (HS), domain shader (DS), geometry shader (GS), stream output (SO), rasterizer stage (RS), pixel shader (PS), and output merger (OM) stages of the rendering pipeline. A few properties of the rendering pipeline (such as viewport and scissor rectangle) require additional API calls but most of the configuration of the rendering pipeline is controlled by the PSO.

Another new concept in DirectX 12 is the root signature. A root signature describes the parameters that are passed to the various programmable shader stages of the rendering pipeline. Examples of parameters that are passed to the programmable shader stages are constant buffers, (read-write) textures, and (read-write) buffers. Texture samplers (an object that determines how the texels in a texture are sampled and filtered) are also defined in the root signature. In this lesson, a very simple root signature is created that uses a constant buffer parameter to rotate a cube in the scene. More complex uses of the root signature will be described in a later lesson.

At the end of this lesson you should have a better understanding of the following concepts:

- Uploading Buffer Resources to the GPU

- Heaps

- Pipeline State Objects

- Root signatures

Heaps and Resources

In the first lesson a swap chain is created which internally contains one or more texture resources that are used to present the final rendered image to the screen. The creation of the texture resource for the swap chain is hidden in the IDXGIFactory2::CreateSwapChainForHwnd method. In this case, the graphics programmer has no control over how and where the resource is created. In this lesson, several buffer resources are required to render the scene. For these resources, the graphics programmer must decide how those buffer resources are created. There are several different ways that GPU resources are allocated within a memory heap (ID3D12Heap):

- Committed Resources

- Placed Resources

- Reserved Resources

In the following sections, the various resource allocation methods are described.

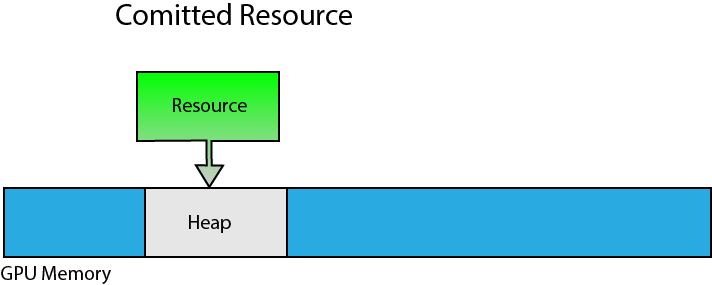

Committed Resources

A committed resource is created using the ID3D12Device::CreateCommittedResource method. This method creates both the resource and an implicit heap that is large enough to hold the resource. The resource is also mapped to the heap. Committed resources are easy to manage because the graphics programmer doesn’t need to be concerned with placing the resource within the heap.

Committed resources are ideal for allocating large resources like textures or statically sized resources (the size of the resource does not change). Committed resources are also commonly used to create large resources in an upload heap that can be used for uploading dynamic vertex or index buffers (useful for UI rendering or for uploading constant buffer data that changes with each draw call).

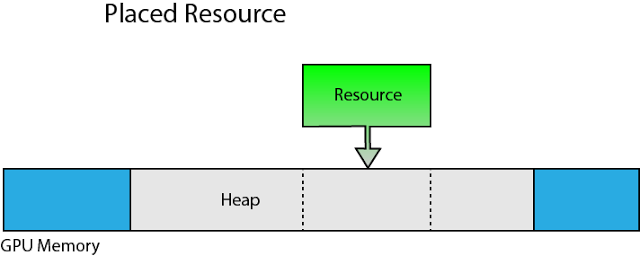

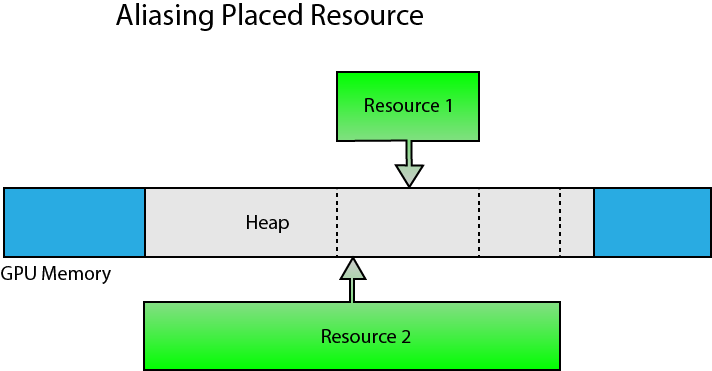

Placed Resources

A placed resource is explicitly placed in a heap at a specific offset within the heap. Before a placed resource can be created, first a heap is created using the ID3D12Device::CreateHeap method. The placed resource is then created inside the heap using the ID3D12Device::CreatePlacedResource method.

Although placed resources provide better performance because the heap does not need to be allocated from global GPU memory for each resource, using placed resources correctly does require some discipline from the graphics programmer. Placed resources provide more options for implementing various memory management techniques but with great power comes great responsibility. When using placed resources, there are some limitations that must be taken into consideration.

The size of the heap that will be used for placed resources must be known in advance. Creating larger than necessary heaps is not a good idea because the only way to reclaim the GPU memory used by the heap is to either evict the heap or completely destroy it. Since you can only evict an entire heap from GPU memory, any resources that are currently placed in the heap must not be referenced in a command list that is being executed on the GPU.
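Because the heap size must be known up front, it can help to lay out the intended resources before the heap is created. The following is a minimal sketch and not code from this lesson: LayoutHeap, PlacedAllocation, and kPlacementAlignment are hypothetical names, and the 64 KB alignment is assumed to match the default placement alignment. In a real application the sizes and alignments would be queried with ID3D12Device::GetResourceAllocationInfo rather than hard-coded.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Assumption: 64 KB, the default placement alignment for placed resources.
constexpr uint64_t kPlacementAlignment = 64 * 1024;

// Round 'value' up to the next multiple of 'alignment' (must be a power of two).
constexpr uint64_t AlignUp(uint64_t value, uint64_t alignment)
{
    return (value + alignment - 1) & ~(alignment - 1);
}

// Hypothetical record of where a resource would be placed inside the heap.
struct PlacedAllocation
{
    uint64_t offset; // Byte offset from the start of the heap.
    uint64_t size;   // Size of the resource in bytes.
};

// Places the resources back to back, aligning each offset, and reports
// the total heap size that would be required through 'heapSize'.
inline std::vector<PlacedAllocation> LayoutHeap(const std::vector<uint64_t>& sizes, uint64_t& heapSize)
{
    std::vector<PlacedAllocation> allocations;
    uint64_t offset = 0;
    for (uint64_t size : sizes)
    {
        assert(size > 0 && "A placed resource must have a non-zero size.");
        offset = AlignUp(offset, kPlacementAlignment);
        allocations.push_back({ offset, size });
        offset += size;
    }
    heapSize = AlignUp(offset, kPlacementAlignment);
    return allocations;
}
```

The offsets computed this way correspond to the heap offset argument of CreatePlacedResource, and the resulting heap size is what would be requested from CreateHeap.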

Depending on the GPU architecture, the type of resource that you can allocate within a particular heap may be limited. For example, buffer resources (vertex buffer, index buffer, constant buffer, structured buffer, etc.) can only be placed in a heap that was created with the ALLOW_ONLY_BUFFERS heap flag. Render target and depth/stencil resources can only be placed in a heap that was created with the ALLOW_ONLY_RT_DS_TEXTURES heap flag. Non render target textures can only be placed in a heap that was created with the ALLOW_ONLY_NON_RT_DS_TEXTURES heap flag. Adapters that support heap tier 2 and higher can create heaps using the ALLOW_ALL_BUFFERS_AND_TEXTURES heap flag to allow any resource type to be placed within that heap. Since the heap tier is dependent on the GPU architecture, most applications will probably be written assuming only heap tier 1 support.
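The heap tier rules above can be summarized as a small decision function. This is only an illustrative sketch: ResourceKind, HeapContents, and RequiredHeapContents are hypothetical names that mirror the heap flags mentioned in the text, not types from the Direct3D 12 headers.

```cpp
#include <cassert>

// Hypothetical classification of the resource that will be placed in a heap.
enum class ResourceKind
{
    Buffer,                           // Vertex, index, constant, structured buffers...
    RenderTargetOrDepthStencilTexture,
    OtherTexture,                     // Textures that are not render targets or depth/stencil.
};

// Hypothetical mirror of the ALLOW_* heap flags described in the text.
enum class HeapContents
{
    OnlyBuffers,
    OnlyRtDsTextures,
    OnlyNonRtDsTextures,
    AllBuffersAndTextures,
};

// Returns the kind of heap that a resource can be placed in for a given heap tier.
inline HeapContents RequiredHeapContents(ResourceKind kind, int heapTier)
{
    assert(heapTier >= 1 && "Heap tiers start at 1.");

    // Heap tier 2 (and higher) adapters may mix all resource types in one heap.
    if (heapTier >= 2)
    {
        return HeapContents::AllBuffersAndTextures;
    }

    // Heap tier 1 adapters need a separate heap per resource category.
    switch (kind)
    {
    case ResourceKind::Buffer:
        return HeapContents::OnlyBuffers;
    case ResourceKind::RenderTargetOrDepthStencilTexture:
        return HeapContents::OnlyRtDsTextures;
    case ResourceKind::OtherTexture:
        return HeapContents::OnlyNonRtDsTextures;
    }
    return HeapContents::OnlyBuffers; // Unreachable for valid input.
}
```

An application written for heap tier 1 only would simply always pass 1 as the tier, which results in one heap per resource category.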

Multiple placed resources can be aliased in a heap as long as they don’t access the same aliased heap space at the same time.

Aliasing can help to avoid oversubscribing GPU memory since the size of the heap can be limited to the size of the largest resource that will be placed in the heap at any moment in time. Aliasing can be used as long as the same space in the heap is not used by multiple aliasing resources at the same time. Aliased resources can be swapped using a resource aliasing barrier.
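To make the memory saving concrete, the sketch below compares the heap size needed with and without aliasing for resources whose lifetimes do not overlap. TransientResource and the pass indices are hypothetical and only serve the illustration. Alignment is ignored to keep the example short.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of a resource that is only used for part of a frame.
struct TransientResource
{
    uint64_t sizeInBytes;
    int firstPass; // First render pass that uses the resource (inclusive).
    int lastPass;  // Last render pass that uses the resource (inclusive).
};

// Two resources may share heap space only if their lifetimes never overlap.
inline bool CanAlias(const TransientResource& a, const TransientResource& b)
{
    return a.lastPass < b.firstPass || b.lastPass < a.firstPass;
}

// Without aliasing, every resource needs its own space in the heap.
inline uint64_t HeapSizeWithoutAliasing(const std::vector<TransientResource>& resources)
{
    uint64_t total = 0;
    for (const TransientResource& resource : resources)
    {
        total += resource.sizeInBytes;
    }
    return total;
}

// With aliasing, the heap only needs to hold the largest resource.
// Precondition: no two resources in the list are alive at the same time.
inline uint64_t HeapSizeWithAliasing(const std::vector<TransientResource>& resources)
{
    uint64_t largest = 0;
    for (std::size_t i = 0; i < resources.size(); ++i)
    {
        for (std::size_t j = i + 1; j < resources.size(); ++j)
        {
            assert(CanAlias(resources[i], resources[j]) && "Lifetimes overlap.");
        }
        largest = std::max(largest, resources[i].sizeInBytes);
    }
    return largest;
}
```

For three resources of 4 MB, 8 MB, and 2 MB that are used in separate passes, the heap shrinks from 14 MB to 8 MB when the resources are aliased.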

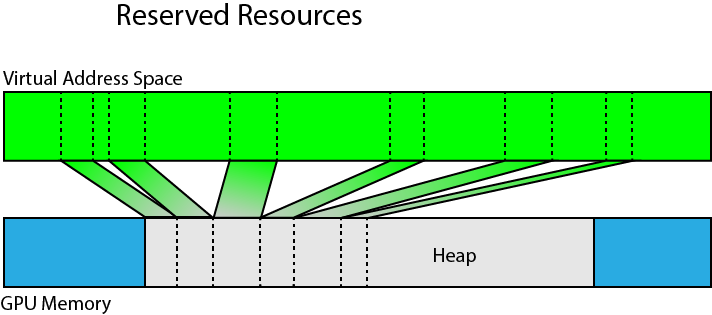

Reserved Resources

Reserved resources are created without specifying a heap to place the resource in. Reserved resources are created using the ID3D12Device::CreateReservedResource method. Before a reserved resource can be used, it must be mapped to a heap using the ID3D12CommandQueue::UpdateTileMappings method.

Reserved resources can be created that are larger than can fit in a single heap. Portions of the reserved resource can be mapped (and unmapped) using one or more heaps residing in physical GPU memory.

Using reserved resources, a large volume texture can be created using virtual memory but only the resident regions of the volume texture need to be mapped to physical memory. This resource type provides options for implementing rendering techniques that use sparse voxel octrees [2] without exceeding GPU memory budgets.
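The idea of mapping only the resident parts of a large virtual resource can be modeled with a small bookkeeping class. This is a CPU-side sketch only: SparseTileMap is a hypothetical name, and the 64 KB tile size is assumed to match the tile size used by reserved (tiled) resources. The actual mapping would be performed with ID3D12CommandQueue::UpdateTileMappings.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

// Assumption: reserved resources are mapped in 64 KB tiles.
constexpr uint64_t kTileSizeInBytes = 64 * 1024;

// Hypothetical bookkeeping for which tiles of a virtual resource are
// currently backed by a tile in a physical heap.
class SparseTileMap
{
public:
    explicit SparseTileMap(uint64_t virtualSizeInBytes)
        : m_TileCount((virtualSizeInBytes + kTileSizeInBytes - 1) / kTileSizeInBytes)
    {}

    // Record that a tile of the resource is backed by a tile of the heap.
    void Map(uint64_t tileIndex, uint64_t heapTileIndex)
    {
        assert(tileIndex < m_TileCount && "Tile index is out of range.");
        m_Mappings[tileIndex] = heapTileIndex;
    }

    // Record that a tile of the resource is no longer backed by memory.
    void Unmap(uint64_t tileIndex)
    {
        m_Mappings.erase(tileIndex);
    }

    bool IsResident(uint64_t tileIndex) const
    {
        return m_Mappings.count(tileIndex) != 0;
    }

    // Number of tiles in the virtual address range of the resource.
    uint64_t TileCount() const { return m_TileCount; }

    // Physical memory that is actually in use by the mapped tiles.
    uint64_t ResidentBytes() const { return m_Mappings.size() * kTileSizeInBytes; }

private:
    uint64_t m_TileCount;
    std::unordered_map<uint64_t, uint64_t> m_Mappings;
};
```

A 1 GB virtual resource spans 16384 tiles in this model, yet it only consumes 128 KB of physical memory while two of its tiles are mapped.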

Pipeline State Object

The various stages of the rendering pipeline were briefly described in the first lesson. Please refer to that lesson if you are not familiar with the stages of the rendering pipeline.

The Pipeline State Object (PSO) contains most of the state that is required to configure the rendering (or compute) pipeline. The graphics pipeline state object includes the following information [3]:

- Shader bytecode (vertex, pixel, domain, hull, and geometry shaders)

- Vertex format input layout

- Primitive topology type (point, line, triangle, or patch)

- Blend state

- Rasterizer state

- Depth-stencil state

- Number of render targets and render target formats

- Depth-stencil format

- Multisample description

- Stream output buffer description

- Root signature

The pipeline state object structure contains a lot of information and if any of the state needs to change between draw calls (for example, a different pixel shader or blend state is needed) then a new pipeline state object needs to be created.
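Since each distinct combination of state requires its own pipeline state object, applications often create each combination once and look it up afterwards. The sketch below models that idea without using Direct3D. PipelineKey and PipelineStateCache are hypothetical, the integer handle stands in for an ID3D12PipelineState pointer, and the key covers only a few of the fields a real PSO description contains.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <tuple>

// Hypothetical subset of the state that identifies a pipeline state object.
struct PipelineKey
{
    int vertexShaderId;
    int pixelShaderId;
    int blendMode;

    bool operator<(const PipelineKey& other) const
    {
        return std::tie(vertexShaderId, pixelShaderId, blendMode)
             < std::tie(other.vertexShaderId, other.pixelShaderId, other.blendMode);
    }
};

// Creates a pipeline state the first time a key is requested and
// returns the existing one on every later request.
class PipelineStateCache
{
public:
    int GetOrCreate(const PipelineKey& key)
    {
        auto iter = m_States.find(key);
        if (iter != m_States.end())
        {
            return iter->second;
        }

        // Stand-in for creating a real pipeline state object on the device.
        int handle = m_NextHandle++;
        m_States.emplace(key, handle);
        return handle;
    }

    std::size_t Size() const { return m_States.size(); }

private:
    std::map<PipelineKey, int> m_States;
    int m_NextHandle = 0;
};
```

Requesting the same key twice returns the same handle, while changing only the pixel shader results in a second pipeline state.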

Although the pipeline state object contains a lot of information, there are a few additional parameters that must be set outside of the pipeline state object.

- Vertex and Index buffers

- Stream output buffer

- Render targets

- Descriptor heaps

- Shader parameters (constant buffers, read-write buffers, and read-write textures)

- Viewports

- Scissor rectangles

- Constant blend factor

- Stencil reference value

- Primitive topology and adjacency information

The pipeline state object can optionally be specified for a graphics command list when the command list is reset using the ID3D12GraphicsCommandList::Reset method but it can also be changed for the command list at any time using the ID3D12GraphicsCommandList::SetPipelineState method.

Root Signatures

A root signature is similar to a C++ function signature, that is, it defines the parameters that are passed to the shader pipeline. The values that are bound to the pipeline are called the root arguments. The arguments that are passed to the shader can change without changing the root signature parameters.

The root parameters in the root signature not only define the type of the parameters that are expected in the shader, they also define the shader registers and register spaces to bind the arguments to in the shader.

Shader Register & Register Spaces

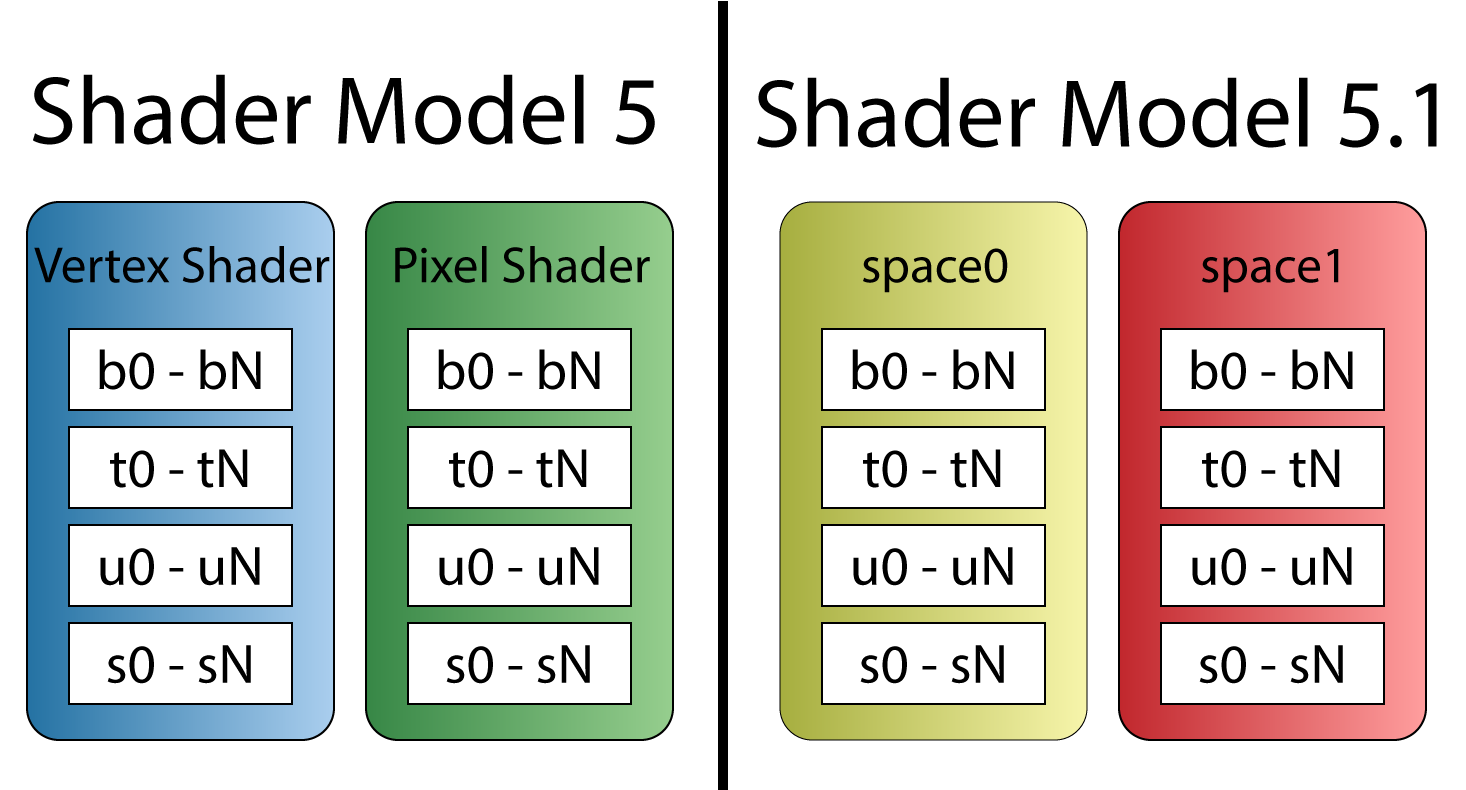

Shader parameters must be bound to a register. For example, constant buffers are bound to b registers (b0 – bN), shader resource views (textures and non-constant buffer types) are bound to t registers (t0 – tN), unordered access views (writeable textures and buffer types) are bound to u registers (u0 – uN), and texture samplers are bound to s registers (s0 – sN) where N is the maximum number of shader registers.

In previous versions of DirectX, different resources could be bound to the same register slot if they were used in different shader stages of the rendering pipeline. For example, a constant buffer could be bound to register b0 in the vertex shader and a different constant buffer could be bound to register b0 in the pixel shader without causing overlap.

DirectX 12 root signatures require that all shader parameters be defined for all stages of the rendering pipeline and registers used across different shader stages may not overlap. In order to provide a work-around for legacy shaders that may violate this rule, Shader Model 5.1 introduces register spaces. Resources can overlap register slots as long as they don’t also overlap register spaces.

Prior to Shader Model 5.1, resource registers could overlap across shader stages (left). Shader Model 5.1 introduces register spaces which can be used to overlap register slots (right).

It is important for the graphics programmer to understand the shader register and register spaces overlapping rules when porting legacy shaders to DirectX 12.
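The overlap rule as stated in this lesson can be expressed as a simple comparison: two bindings collide only when the register type, the register slot, and the register space are all equal. ShaderBinding and BindingsOverlap are hypothetical names used for this sketch, which deliberately ignores shader visibility.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical description of where a shader parameter is bound.
struct ShaderBinding
{
    char registerType;      // 'b' (CBV), 't' (SRV), 'u' (UAV), or 's' (sampler).
    uint32_t registerSlot;  // The N in bN, tN, uN, or sN.
    uint32_t registerSpace; // The M in spaceM.
};

inline bool IsValidRegisterType(char registerType)
{
    return registerType == 'b' || registerType == 't' ||
           registerType == 'u' || registerType == 's';
}

// Two bindings overlap only if they use the same register type,
// the same register slot, and the same register space.
inline bool BindingsOverlap(const ShaderBinding& a, const ShaderBinding& b)
{
    assert(IsValidRegisterType(a.registerType) && IsValidRegisterType(b.registerType));
    return a.registerType == b.registerType &&
           a.registerSlot == b.registerSlot &&
           a.registerSpace == b.registerSpace;
}
```

With this check, a legacy vertex shader constant buffer at register(b0, space0) and a pixel shader constant buffer moved to register(b0, space1) no longer collide.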

Root Signature Parameters

A root signature can contain any number of parameters (within the size limit described later in this lesson). Each parameter can be one of the following types:

- D3D12_ROOT_PARAMETER_TYPE_32BIT_CONSTANTS: 32-bit Root Constants

- D3D12_ROOT_PARAMETER_TYPE_CBV: Inline CBV Descriptor

- D3D12_ROOT_PARAMETER_TYPE_SRV: Inline SRV Descriptor

- D3D12_ROOT_PARAMETER_TYPE_UAV: Inline UAV Descriptor

- D3D12_ROOT_PARAMETER_TYPE_DESCRIPTOR_TABLE: Descriptor Table

32-Bit Constants

Constant buffer data can be passed to the shader without the need to create a constant buffer resource by using the 32-bit constants. Dynamic indexing into the constant buffer data is not supported for constant data that is stored in the root signature space [4]. For example, the following constant buffer definition can be mapped to 32-bit constants stored in the root signature:

```hlsl
cbuffer TransformsCB : register(b0, space0)
{
    matrix Model;
    matrix View;
    matrix Projection;
}
```

But, due to the array, the following constant buffer definition cannot be mapped to 32-bit constants in the root signature and instead requires either an inline descriptor or a descriptor heap to map the resource:

```hlsl
cbuffer TransformsCB : register(b0, space0)
{
    matrix MVPMatrices[3];
}
```

Each root constant in the root signature costs 1 DWORD (32-bits) [5].
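As a worked example of this cost, the sketch below mirrors the first TransformsCB definition on the CPU and counts how many 32-bit values it occupies. Matrix4x4 and NumRootConstants are hypothetical helpers written for this illustration. The resulting count is the kind of value that would be supplied as the number of 32-bit values when describing the root parameter and when calling ID3D12GraphicsCommandList::SetGraphicsRoot32BitConstants.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical CPU-side 4x4 float matrix (16 floats, 64 bytes).
struct Matrix4x4
{
    float m[4][4];
};

// CPU-side mirror of the TransformsCB constant buffer.
struct TransformsCB
{
    Matrix4x4 Model;
    Matrix4x4 View;
    Matrix4x4 Projection;
};

// Number of 32-bit root constants needed to hold 'sizeInBytes' bytes of data.
inline uint32_t NumRootConstants(std::size_t sizeInBytes)
{
    assert(sizeInBytes % sizeof(uint32_t) == 0 && "Size must be a multiple of 4 bytes.");
    return static_cast<uint32_t>(sizeInBytes / sizeof(uint32_t));
}
```

A single matrix costs 16 DWORDs, so the three matrices of TransformsCB cost 48 DWORDs when they are stored as root constants.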

Inline Descriptors

Descriptors can be placed directly in the root signature without requiring a descriptor heap [6]. Only constant buffers (CBV) and buffer resources (SRV, UAV) containing 32-bit (FLOAT, UINT, or SINT) components can be accessed using inline descriptors in the root signature. Inline UAV descriptors for buffer resources cannot contain counters (for example, if a RWStructuredBuffer contains a counter resource, it may not be accessed through an inline descriptor in the root signature). Texture resources cannot be referenced using inline descriptors in the root signature and must be placed in a descriptor heap and referenced through a descriptor table.

Unlike root constants, constant buffers containing arrays may be accessed using inline descriptors in the root signature. For example, the following constant buffer can be referenced using inline descriptors:

```hlsl
cbuffer SceneData : register(b0, space0)
{
    uint foo;
    float bar[2];
    int moo;
};
```

Each inline descriptor in the root signature costs 2 DWORDs (64-bits) [5].

Descriptor Tables

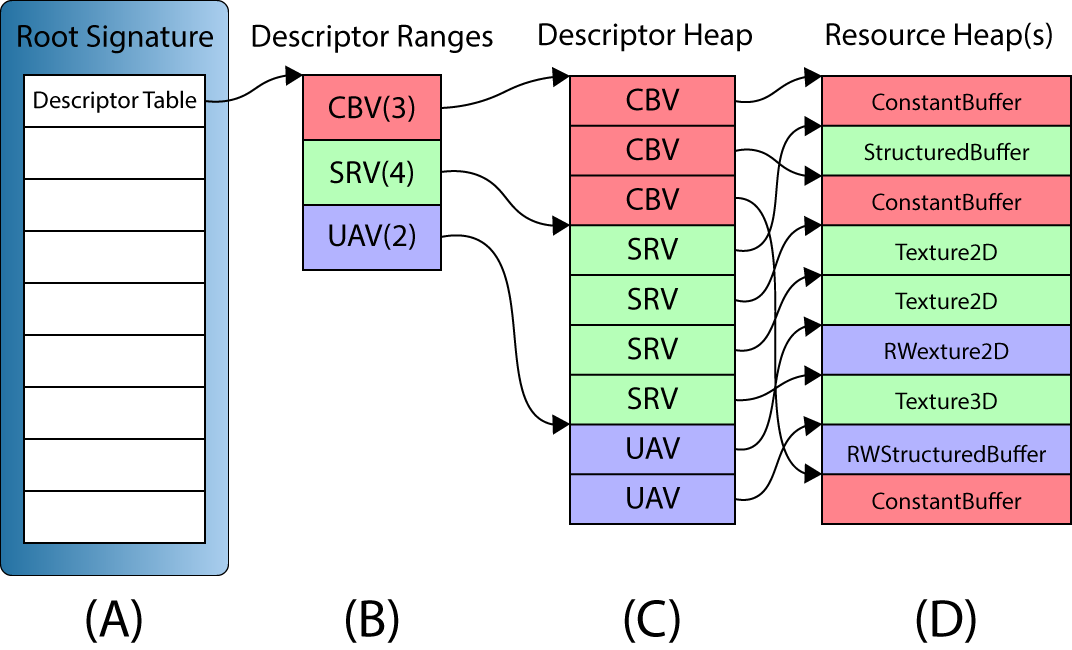

A descriptor table defines several descriptor ranges that are placed consecutively in a GPU visible descriptor heap.

A descriptor table (A) in a root signature defines a set of one or more descriptor ranges (B). The descriptor ranges define a set of consecutive descriptors in a GPU visible descriptor heap (C). The descriptors are views to the GPU resources stored in one or more resource heaps (D).

The above image illustrates a root signature with a single descriptor table parameter (A). The descriptor table contains three descriptor ranges (B): 3 Constant Buffer Views (CBV), 4 Shader Resource Views (SRV), and 2 Unordered Access Views (UAV). CBVs, SRVs, and UAVs can be referenced in the same descriptor table since all three types of descriptors can be stored in the same descriptor heap. The GPU visible descriptors (C) must appear consecutively in a GPU visible descriptor heap but the resources that they refer to (D) may appear anywhere in GPU memory, even in different resource heaps.

The above image shows a simplified version of the descriptor table ranges. The descriptor ranges in a descriptor table also need to specify the register slots and spaces that the arguments will be bound to in the shaders. An example of configuring a root signature will be shown later in the lesson.

Each descriptor table in the root signature costs 1 DWORD (32-bits) [5].

Static Samplers

A texture sampler describes how a texture should be sampled. It describes the filtering, addressing, and Level of Detail (LOD) to use while sampling from a texture. Using textures and texture samplers will be the topic of another lesson. In this lesson, it is important to understand that texture samplers can be defined directly in the root signature without the need of a descriptor heap. The D3D12_STATIC_SAMPLER_DESC structure is used to define a texture sampler directly in the root signature.

Static samplers do not use any space in the root signature and do not count against the size limit of the root signature.

Root Signature Constraints

Root signatures are limited to 64 DWORDs (2048-bits) [5]. Each root parameter has a cost that counts towards the root signature limit:

- 32-bit constants each cost 1 DWORD

- Inline descriptors each cost 2 DWORDs

- Descriptor tables each cost 1 DWORD

Static samplers defined in the root signature do not count towards the size of the root signature.
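The costs listed above can be added up to check whether a planned root signature stays within the 64 DWORD limit. The following is a minimal sketch: RootParameterKind, RootParameter, and the helper functions are hypothetical and are not part of the Direct3D 12 API.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical classification of a root parameter for cost purposes.
enum class RootParameterKind
{
    Constants32Bit,   // Costs 1 DWORD per 32-bit value.
    InlineDescriptor, // Inline CBV, SRV, or UAV: costs 2 DWORDs.
    DescriptorTable,  // Costs 1 DWORD.
};

struct RootParameter
{
    RootParameterKind kind;
    uint32_t num32BitValues; // Only used for Constants32Bit.
};

// Maximum size of a root signature in DWORDs.
constexpr uint32_t kMaxRootSignatureDwords = 64;

inline uint32_t CostInDwords(const RootParameter& parameter)
{
    switch (parameter.kind)
    {
    case RootParameterKind::Constants32Bit:
        return parameter.num32BitValues;
    case RootParameterKind::InlineDescriptor:
        return 2;
    case RootParameterKind::DescriptorTable:
        return 1;
    }
    assert(false && "Unknown root parameter kind.");
    return 0;
}

inline uint32_t TotalCostInDwords(const std::vector<RootParameter>& parameters)
{
    uint32_t total = 0;
    for (const RootParameter& parameter : parameters)
    {
        total += CostInDwords(parameter);
    }
    return total;
}

inline bool FitsInRootSignature(const std::vector<RootParameter>& parameters)
{
    return TotalCostInDwords(parameters) <= kMaxRootSignatureDwords;
}
```

For example, a single matrix stored as 16 root constants, one inline descriptor, and one descriptor table together cost 16 + 2 + 1 = 19 DWORDs, which leaves plenty of room in the root signature.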

The cost of accessing a root argument in a root signature in terms of levels of indirection is zero for 32-bit constants, 1 for inline descriptors, and 2 for descriptor tables [5].

The graphics programmer should strive to keep the root signature as small as possible while balancing that against the flexibility that a larger root signature provides. Parameters in the root signature should be ordered based on the likelihood that the arguments will change. If the root arguments are changing often then they should appear first in the root signature. If the root arguments are not changing often, then they should be the last parameters that appear in the root signature. Since 32-bit constants and inline descriptors have better performance in terms of level of indirection, they should be favored over using descriptor tables as long as the size of the root signature does not become too large.
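The ordering advice can be sketched as a sort on how often each argument is expected to change. RootParameterInfo and its changesPerFrame estimate are hypothetical. In practice the estimate would come from profiling or from knowledge of the renderer.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical planning record for a root parameter.
struct RootParameterInfo
{
    std::string name;
    int changesPerFrame; // Estimated number of times the argument is set per frame.
};

// Orders the parameters so that the most frequently changing arguments come first.
// A stable sort keeps the original order of parameters that change equally often.
inline void OrderByChangeFrequency(std::vector<RootParameterInfo>& parameters)
{
    assert(std::all_of(parameters.begin(), parameters.end(),
        [](const RootParameterInfo& p) { return p.changesPerFrame >= 0; }));

    std::stable_sort(parameters.begin(), parameters.end(),
        [](const RootParameterInfo& a, const RootParameterInfo& b)
        {
            return a.changesPerFrame > b.changesPerFrame;
        });
}
```

A per-object transform that changes with every draw call would end up first, followed by per-material data, and finally data that is set once per frame.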

An example of a simple root signature is provided later in this lesson.

DirectX 12 Demo

The previous lesson showed how to initialize a DirectX 12 application without using any C++ classes. Some of the source code from the first lesson was refactored in order to simplify the source code for this and future lessons. There are four primary classes that are used for this lesson:

- Application

- Window

- CommandQueue

- Game

The Application class is responsible for initializing application specific data such as the DirectX 12 device and command queues. The Application class is also responsible for creating the Window instances and it is also the owner of the Window instances (Window instances can only be created and destroyed using the Application class). The Application class also exposes a Run method which is used to run the game and execute the message loop. The Quit method is used to quit the running application.

The Window class creates the swap chain which contains the final rendered image that will be presented to the screen. The Window class also contains functions to resize the window and to toggle the vsync and fullscreen states.

The source code for the Application and the Window classes is not discussed in this lesson. You are encouraged to go back to the previous lesson if you are not familiar with the functionality of these classes. You may also refer to the source code for this lesson on GitHub. A link to the source code for this lesson is also provided at the end of the tutorial.

The CommandQueue and the Game class on the other hand may not be immediately clear and therefore will be discussed in greater detail in this lesson.

If you would prefer to skip the discussion on refactoring of the CommandQueue and Game classes then you can continue directly to the section about Shaders. Be aware that you may see code later in the lesson that you are not familiar with if you skip these sections.

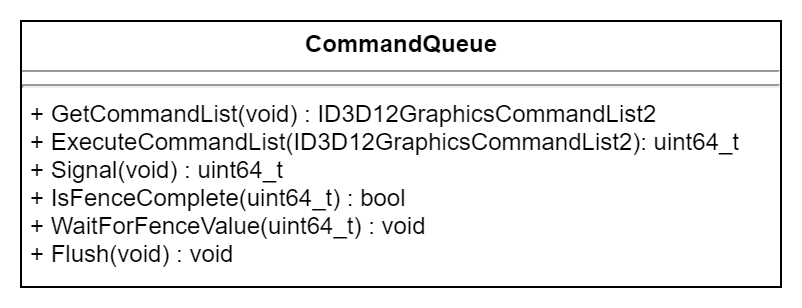

The Command Queue Class

The CommandQueue class encapsulates the ID3D12CommandQueue interface and the synchronization primitives that are used to perform GPU synchronization with the CPU. Most of the functionality of this class was discussed in the previous lesson so it should already be familiar but it is important to understand the specific choices that were made while creating the class before seeing it used in the demo.

CommandQueue Header

The CommandQueue class must provide the following functionality:

- Get a command list that can be used to record the draw commands

- Execute the command list on the command queue

- Signal a Fence in the command queue

- Check to see if a particular fence value has been reached

- Wait for a particular fence value to be reached

- Flush any pending commands that are executing on the command queue

The class diagram for the CommandQueue class might look like this:

CommandQueue Preamble

The CommandQueue has some dependencies on some system headers which are included first.

```cpp
/**
 * Wrapper class for a ID3D12CommandQueue.
 */

#pragma once

#include <d3d12.h>  // For ID3D12CommandQueue, ID3D12Device2, and ID3D12Fence
#include <wrl.h>    // For Microsoft::WRL::ComPtr

#include <cstdint>  // For uint64_t
#include <queue>    // For std::queue
```

The d3d12.h header file is required for the ID3D12CommandQueue, ID3D12Device2, and ID3D12Fence interfaces. The wrl.h header is required for the ComPtr class. The cstdint header is used for the uint64_t integer type and the queue header is used for the std::queue template class.

CommandQueue Class Definition

The public methods of the class are shown first.

```cpp
class CommandQueue
{
public:
    CommandQueue(Microsoft::WRL::ComPtr<ID3D12Device2> device, D3D12_COMMAND_LIST_TYPE type);
    virtual ~CommandQueue();

    // Get an available command list from the command queue.
    Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> GetCommandList();

    // Execute a command list.
    // Returns the fence value to wait for for this command list.
    uint64_t ExecuteCommandList(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList);

    uint64_t Signal();
    bool IsFenceComplete(uint64_t fenceValue);
    void WaitForFenceValue(uint64_t fenceValue);
    void Flush();

    Microsoft::WRL::ComPtr<ID3D12CommandQueue> GetD3D12CommandQueue() const;
```

The parameterized constructor for the class takes the DirectX 12 device and the type of the command queue to create as arguments. Creation of the DirectX 12 device and the different types of command queues was discussed in the previous lesson.

The GetCommandList method returns a command list that can be used to issue drawing commands. The command list returned from this method is in a state in which it can immediately be used to record commands. There is no need to reset the command list or to create a command allocator for the command list.

After the commands have been recorded into the command list, they can be executed on the command queue using the ExecuteCommandList method. The ExecuteCommandList method returns the fence value that can be used to check if the commands in the command list have finished executing on the command queue.

The Signal, IsFenceComplete, WaitForFenceValue, and Flush methods have the same functionality as described in the previous lesson and will not be discussed any further in this lesson.

The GetD3D12CommandQueue method is used to get access to the underlying ID3D12CommandQueue interface.

The protected methods are described next.

```cpp
protected:

    Microsoft::WRL::ComPtr<ID3D12CommandAllocator> CreateCommandAllocator();
    Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> CreateCommandList(Microsoft::WRL::ComPtr<ID3D12CommandAllocator> allocator);
```

The CreateCommandAllocator and CreateCommandList methods are used to create a command allocator and command list if no command list or command allocator are currently available.

Data that is private to the CommandQueue class is described next.

```cpp
private:
    // Keep track of command allocators that are "in-flight"
    struct CommandAllocatorEntry
    {
        uint64_t fenceValue;
        Microsoft::WRL::ComPtr<ID3D12CommandAllocator> commandAllocator;
    };

    using CommandAllocatorQueue = std::queue<CommandAllocatorEntry>;
    using CommandListQueue = std::queue< Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> >;

    D3D12_COMMAND_LIST_TYPE                     m_CommandListType;
    Microsoft::WRL::ComPtr<ID3D12Device2>       m_d3d12Device;
    Microsoft::WRL::ComPtr<ID3D12CommandQueue>  m_d3d12CommandQueue;
    Microsoft::WRL::ComPtr<ID3D12Fence>         m_d3d12Fence;
    HANDLE                                      m_FenceEvent;
    uint64_t                                    m_FenceValue;

    CommandAllocatorQueue                       m_CommandAllocatorQueue;
    CommandListQueue                            m_CommandListQueue;
};
```

The CommandAllocatorEntry structure is used to associate a fence value with a command allocator. As discussed in the previous lesson, the command list can be reused immediately after it has been executed on the command queue, but the command allocator cannot be reused unless the commands stored in the command allocator have finished executing on the command queue. In order to guarantee that the command allocator is no longer “in-flight” on the command queue, a fence value is signaled on the command queue and that fence value (together with the associated command allocator) is stored for later reuse.

The CommandAllocatorQueue alias describes the std::queue object that is used to queue command allocators that are “in-flight” on the GPU queue. As soon as the fence value that is associated with the CommandAllocatorEntry has been reached, the command allocator can be reused.

Similar to the CommandAllocatorQueue, the CommandListQueue alias describes a std::queue object that is used to queue command lists that can be reused. There is no need to associate a fence value with the command lists since they can be reused right after they have been executed on the command queue.
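The reuse policy for command allocators can be modeled without any Direct3D types. In the sketch below, AllocatorPool is a hypothetical class, integer ids stand in for ID3D12CommandAllocator pointers, and the completed fence value is passed in by the caller instead of being read from an ID3D12Fence.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>

// Hypothetical model of the in-flight command allocator queue.
class AllocatorPool
{
public:
    // Returns an allocator that is safe to record into. The allocator at the
    // front of the queue is reused if the GPU has reached its fence value,
    // otherwise a new allocator is "created".
    int Acquire(uint64_t completedFenceValue)
    {
        if (!m_InFlight.empty() && m_InFlight.front().fenceValue <= completedFenceValue)
        {
            int allocatorId = m_InFlight.front().allocatorId;
            m_InFlight.pop();
            return allocatorId;
        }
        return m_NextAllocatorId++;
    }

    // Called after the command list has been executed: the allocator stays
    // in-flight until 'fenceValue' has been reached on the command queue.
    void Release(int allocatorId, uint64_t fenceValue)
    {
        assert(m_InFlight.empty() || m_InFlight.back().fenceValue <= fenceValue);
        m_InFlight.push({ fenceValue, allocatorId });
    }

    int CreatedCount() const { return m_NextAllocatorId; }

private:
    struct Entry
    {
        uint64_t fenceValue;
        int allocatorId;
    };

    std::queue<Entry> m_InFlight;
    int m_NextAllocatorId = 0;
};
```

Because fence values only increase, checking the front of the queue is enough: if the oldest allocator is still in-flight, then every allocator behind it is in-flight as well.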

Since there are several different types of command queues as described in the previous lesson, the command queue (command list) type is stored in the m_CommandListType member variable.

The m_d3d12Device member variable stores a pointer to the ID3D12Device2 interface that is used to create the command queue, command lists, and command allocators used by this class.

The m_d3d12CommandQueue member variable is used to store the pointer to the ID3D12CommandQueue interface that is owned by this class.

The m_d3d12Fence, m_FenceEvent, and m_FenceValue member variables are the synchronization primitives required to perform CPU-GPU synchronization. These primitives were discussed in the previous lesson and will not be discussed any further in this lesson.

The m_CommandAllocatorQueue and m_CommandListQueue member variables define the command allocator queue and command list queue that are used to queue up command allocators and command lists that can be reused.

CommandQueue Implementation

In this section, the implementations of the methods defined in the CommandQueue class are shown.

CommandQueue::CommandQueue

The CommandQueue constructor is used to create an instance of the CommandQueue class (obviously).

```cpp
CommandQueue::CommandQueue(Microsoft::WRL::ComPtr<ID3D12Device2> device, D3D12_COMMAND_LIST_TYPE type)
    : m_FenceValue(0)
    , m_CommandListType(type)
    , m_d3d12Device(device)
{
    D3D12_COMMAND_QUEUE_DESC desc = {};
    desc.Type = type;
    desc.Priority = D3D12_COMMAND_QUEUE_PRIORITY_NORMAL;
    desc.Flags = D3D12_COMMAND_QUEUE_FLAG_NONE;
    desc.NodeMask = 0;

    ThrowIfFailed(m_d3d12Device->CreateCommandQueue(&desc, IID_PPV_ARGS(&m_d3d12CommandQueue)));
    ThrowIfFailed(m_d3d12Device->CreateFence(m_FenceValue, D3D12_FENCE_FLAG_NONE, IID_PPV_ARGS(&m_d3d12Fence)));

    m_FenceEvent = ::CreateEvent(NULL, FALSE, FALSE, NULL);
    assert(m_FenceEvent && "Failed to create fence event handle.");
}
```

The D3D12_COMMAND_QUEUE_DESC structure is filled in first and then the ID3D12CommandQueue is created using the ID3D12Device::CreateCommandQueue method.

The fence object that is used to perform CPU-GPU synchronization is created next using the ID3D12Device::CreateFence method, followed by the fence event, which is created using the CreateEvent function.

CommandQueue::CreateCommandAllocator

The CreateCommandAllocator method is used to create a new instance of an ID3D12CommandAllocator.

```cpp
Microsoft::WRL::ComPtr<ID3D12CommandAllocator> CommandQueue::CreateCommandAllocator()
{
    Microsoft::WRL::ComPtr<ID3D12CommandAllocator> commandAllocator;
    ThrowIfFailed(m_d3d12Device->CreateCommandAllocator(m_CommandListType, IID_PPV_ARGS(&commandAllocator)));

    return commandAllocator;
}
```

The ID3D12Device::CreateCommandAllocator method is used to create an instance of the ID3D12CommandAllocator interface. This method was described in the previous lesson.

CommandQueue::CreateCommandList

Similar to the CreateCommandAllocator method, the CreateCommandList method is used to create an instance of an ID3D12GraphicsCommandList2. In this case, the command list requires a pointer to a valid ID3D12CommandAllocator.

```cpp
Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> CommandQueue::CreateCommandList(Microsoft::WRL::ComPtr<ID3D12CommandAllocator> allocator)
{
    Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList;
    ThrowIfFailed(m_d3d12Device->CreateCommandList(0, m_CommandListType, allocator.Get(), nullptr, IID_PPV_ARGS(&commandList)));

    return commandList;
}
```

The ID3D12GraphicsCommandList2 is created using the ID3D12Device::CreateCommandList method. This method was described in the previous lesson.

CommandQueue::GetCommandList

One of the more interesting methods for the CommandQueue class is the GetCommandList method. This method returns a command list that can be directly used to issue GPU drawing (or dispatch) commands. The command list will be in the recording state so there is no need for the user to reset the command list before using it. A command allocator will already be associated with the command list but the CommandQueue class needs a way to keep track of which command allocator is associated with which command list. Since there is no way to directly query the command list for the command allocator that was used to reset it, a pointer to the command allocator is stored in the private data space of the command list.

```cpp
Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> CommandQueue::GetCommandList()
{
    Microsoft::WRL::ComPtr<ID3D12CommandAllocator> commandAllocator;
    Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList;
```

Two temporary variables are defined to store the command allocator and command list.

Before the command list can be reset, an unused command allocator is required. The command allocator can be reused as long as it is not currently “in-flight” on the command queue.

```cpp
    if ( !m_CommandAllocatorQueue.empty() && IsFenceComplete(m_CommandAllocatorQueue.front().fenceValue))
    {
        commandAllocator = m_CommandAllocatorQueue.front().commandAllocator;
        m_CommandAllocatorQueue.pop();

        ThrowIfFailed(commandAllocator->Reset());
    }
    else
    {
        commandAllocator = CreateCommandAllocator();
    }
```

On line 74, the command allocator queue is checked to see if there are any valid items in the queue. If there is at least one item in the command allocator queue and the fence value associated with that command allocator has been reached, then it is popped off the front of the queue and reset. Otherwise, a new command allocator is created on line 83 using the CreateCommandAllocator method described earlier.
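The reuse rule can be exercised without a GPU. The following is a minimal sketch and not the CommandQueue implementation: `AllocatorPool`, `Acquire`, `Retire`, and the integer allocator IDs are hypothetical stand-ins, and the completed fence value is passed in by hand instead of being queried from a fence object.

```cpp
#include <cassert>
#include <cstdint>
#include <queue>

// Hypothetical stand-in for a queued command allocator: just an ID.
struct AllocatorEntry
{
    uint64_t fenceValue;  // Fence value that must be reached before reuse.
    int      allocatorId;
};

class AllocatorPool
{
public:
    // Returns the ID of an allocator that is safe to record into. An allocator
    // is only reused if the GPU has reached the fence value it was retired with.
    int Acquire(uint64_t completedFenceValue)
    {
        if (!m_Queue.empty() && m_Queue.front().fenceValue <= completedFenceValue)
        {
            const int id = m_Queue.front().allocatorId;
            m_Queue.pop();
            return id; // The real code would reset the allocator here.
        }
        return m_NextId++; // Nothing reusable yet: "create" a new allocator.
    }

    // Called after the work recorded with this allocator has been submitted.
    void Retire(int allocatorId, uint64_t fenceValue)
    {
        // Checking only the front of the queue is valid because fence values
        // are pushed in increasing order.
        assert(m_Queue.empty() || m_Queue.back().fenceValue <= fenceValue);
        m_Queue.push(AllocatorEntry{ fenceValue, allocatorId });
    }

private:
    std::queue<AllocatorEntry> m_Queue;
    int m_NextId = 0;
};
```

In this sketch, an allocator retired with fence value 1 is handed out again only once `Acquire` is called with a completed fence value of at least 1. Until then, a new ID is created, which mirrors the else branch shown above.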

With a valid command allocator, the command list is created next.

```cpp
    if (!m_CommandListQueue.empty())
    {
        commandList = m_CommandListQueue.front();
        m_CommandListQueue.pop();

        ThrowIfFailed(commandList->Reset(commandAllocator.Get(), nullptr));
    }
    else
    {
        commandList = CreateCommandList(commandAllocator);
    }
```

The command list queue is checked to see if there are any command lists in the queue. If there is at least one, it is popped from the front of the queue and reset using the command allocator that was retrieved in the previous step.

If there are no command lists available in the command list queue, then a new one is created on line 95 using the CreateCommandList method described earlier.

Before returning the command list to the calling function, the command allocator needs to be associated with the command list by assigning a pointer to the command allocator to the private data of the command list.

```cpp
    // Associate the command allocator with the command list so that it can be
    // retrieved when the command list is executed.
    ThrowIfFailed(commandList->SetPrivateDataInterface(__uuidof(ID3D12CommandAllocator), commandAllocator.Get()));

    return commandList;
}
```

The command allocator is associated with the command list on line 100 by assigning it to the private data of the command list using the ID3D12Object::SetPrivateDataInterface method. This is done so that the command allocator associated with the command list can be easily tracked outside of the CommandQueue class. When the command list is executed (which will be shown next), the command allocator can be retrieved from the command list and pushed to the back of the command allocator queue.

When a COM object is assigned to the private data of an ID3D12Object object using the ID3D12Object::SetPrivateDataInterface method, the internal reference counter of the assigned COM object is incremented. The reference counter of the assigned COM object is only decremented if either the owning ID3D12Object object is destroyed or the COM object is replaced with another COM object of the same interface or a NULL pointer.

I spent hours debugging what seemed like a COM pointer leak until I realized this.

CommandQueue::ExecuteCommandList

The ExecuteCommandList method is used to execute a command list that was previously retrieved using the GetCommandList method.

```cpp
uint64_t CommandQueue::ExecuteCommandList(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList)
{
    commandList->Close();

    ID3D12CommandAllocator* commandAllocator;
    UINT dataSize = sizeof(commandAllocator);
    ThrowIfFailed(commandList->GetPrivateData(__uuidof(ID3D12CommandAllocator), &dataSize, &commandAllocator));

    ID3D12CommandList* const ppCommandLists[] = {
        commandList.Get()
    };

    m_d3d12CommandQueue->ExecuteCommandLists(1, ppCommandLists);
    uint64_t fenceValue = Signal();

    m_CommandAllocatorQueue.emplace(CommandAllocatorEntry{ fenceValue, commandAllocator });
    m_CommandListQueue.push(commandList);
```

Before the command list can be executed, it must be closed. The command list is closed on line 109 using the ID3D12GraphicsCommandList::Close method.

The command allocator that was previously associated to the command list is retrieved from the private data of the command list on line 113 using the ID3D12Object::GetPrivateData method.

Retrieving a COM pointer from the private data of an ID3D12Object object will also increment the reference counter of that COM object.

Since the ID3D12CommandQueue::ExecuteCommandLists method expects an array of ID3D12CommandList pointers, a temporary array is created on line 115 and passed to the ID3D12CommandQueue::ExecuteCommandLists method on line 119.

Immediately after executing the command list, the queue is signaled using the Signal method. This produces the fence value to wait for to ensure that the command allocators can be reused.

On lines 122-123 the command allocator and the command list are pushed to the back of their respective queues so that they can be reused the next time the GetCommandList method is called.

```cpp
    // The ownership of the command allocator has been transferred to the ComPtr
    // in the command allocator queue. It is safe to release the reference
    // in this temporary COM pointer here.
    commandAllocator->Release();

    return fenceValue;
}
```

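Before leaving this function, the reference held by the temporary pointer to the command allocator needs to be released using the IUnknown::Release method. This decrements the reference count that was incremented when the pointer was retrieved from the private data of the command list.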

On line 130, the fence value that can be used to perform CPU-GPU synchronization is returned to the calling function.
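The reference-count bookkeeping described for GetCommandList and ExecuteCommandList can be traced with a toy model. This sketch assumes nothing about the internals of DirectX 12: `RefCounted`, `PrivateDataSlot`, and their methods are hypothetical stand-ins that only mimic the AddRef and Release behaviour described in this section.

```cpp
#include <cassert>

// Hypothetical stand-in for a COM object with an intrusive reference count.
struct RefCounted
{
    int refCount = 1; // The creator holds the first reference.

    void AddRef()  { ++refCount; }
    void Release() { assert(refCount > 0); --refCount; }
};

// Hypothetical stand-in for the private data slot of an ID3D12Object.
struct PrivateDataSlot
{
    RefCounted* stored = nullptr;

    // Mimics SetPrivateDataInterface: the slot takes its own reference and
    // releases whatever it held before (passing nullptr clears the slot).
    void Set(RefCounted* object)
    {
        if (object) object->AddRef();
        if (stored) stored->Release();
        stored = object;
    }

    // Mimics retrieving the interface pointer: the caller receives a new
    // reference and is responsible for releasing it.
    RefCounted* Get() const
    {
        if (stored) stored->AddRef();
        return stored;
    }
};
```

In this model, storing the allocator raises its count from 1 to 2, retrieving it raises the count to 3, and releasing the temporary pointer brings it back to 2. Forgetting that last release is the kind of leak the note above warns about.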

This concludes the description of the refactored CommandQueue class that is used by the demo code that follows. There is also an Application class and a Window class that will be used but not described in detail since they are (almost) a direct copy of the source code shown in the previous lesson.

In the next section, the Game class is described. The Game class provides a base class for the demo for this lesson and any future demos created in this series of lessons.

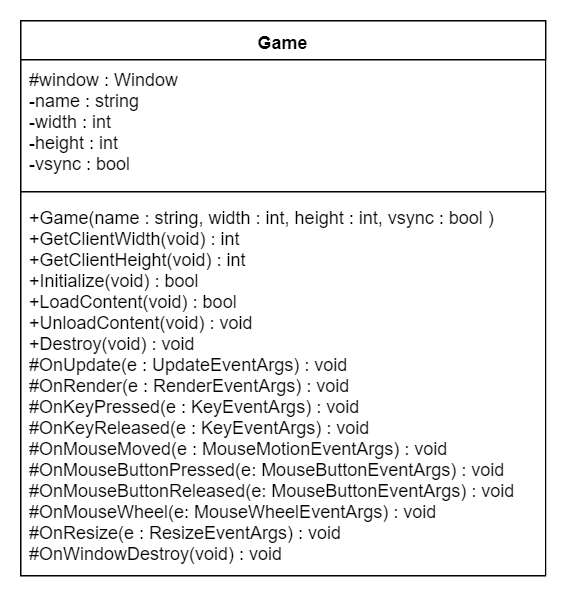

The Game Class

The Game class serves as the abstract base class for the demos. The Game class should perform the following functions:

- Initialize the DirectX 12 Runtime (and create a window to render to)

- Load the content that is specific to the demo

- Unload the content including freeing any memory used by the demo

- Destroy any resources used by the DirectX 12 runtime (destroy the render window)

- Respond to window messages (like keyboard and mouse events)

A UML diagram for the Game class might look like this:

The various functions of the Game class are described in the next section.

The Game Header

In this section, the interface for the Game class is described.

```cpp
/**
 * @brief The Game class is the abstract base class for DirectX 12 demos.
 */
#pragma once

#include <Events.h>

#include <memory> // for std::enable_shared_from_this
#include <string> // for std::wstring

class Window;
```

The Events.h header file included on line 6 contains structures that are used to relay window events back to the Game class. Examples of window events that are fired on the Game class are the mouse and keyboard events that occur while the game’s window has focus.

The memory and string standard headers are also included on lines 8-9 to provide the std::enable_shared_from_this template and the std::wstring class from the Standard Template Library (STL).

On line 11, the Window class is forward-declared in order to avoid including the header file for that class.

```cpp
class Game : public std::enable_shared_from_this<Game>
{
public:
    /**
     * Create the DirectX demo using the specified window dimensions.
     */
    Game(const std::wstring& name, int width, int height, bool vSync);
    virtual ~Game();
```

The constructor for the Game class takes the window title (name), and width and height of the render window to create. The vSync option specifies whether rendering should be synchronized to the vertical refresh rate of the screen or not.

```cpp
    /**
     * Initialize the DirectX Runtime.
     */
    virtual bool Initialize();

    /**
     * Load content required for the demo.
     */
    virtual bool LoadContent() = 0;

    /**
     * Unload demo specific content that was loaded in LoadContent.
     */
    virtual void UnloadContent() = 0;

    /**
     * Destroy any resource that are used by the game.
     */
    virtual void Destroy();
```

The Game class provides several methods that can be overridden by a child class. The Game class provides a default implementation for the Initialize method which just creates a Window to render the graphics to and registers the window callback functions. The default implementation of the Destroy method just destroys the window. The implementations of the LoadContent and UnloadContent must be provided by the child class (which is also the subject of this lesson).

The Game class also provides several callback functions for window events which can be overridden by a child class. The default implementation of each window callback function is to do nothing.

```cpp
protected:
    friend class Window;

    /**
     * Update the game logic.
     */
    virtual void OnUpdate(UpdateEventArgs& e);

    /**
     * Render stuff.
     */
    virtual void OnRender(RenderEventArgs& e);

    /**
     * Invoked by the registered window when a key is pressed
     * while the window has focus.
     */
    virtual void OnKeyPressed(KeyEventArgs& e);

    /**
     * Invoked when a key on the keyboard is released.
     */
    virtual void OnKeyReleased(KeyEventArgs& e);

    /**
     * Invoked when the mouse is moved over the registered window.
     */
    virtual void OnMouseMoved(MouseMotionEventArgs& e);

    /**
     * Invoked when a mouse button is pressed over the registered window.
     */
    virtual void OnMouseButtonPressed(MouseButtonEventArgs& e);

    /**
     * Invoked when a mouse button is released over the registered window.
     */
    virtual void OnMouseButtonReleased(MouseButtonEventArgs& e);

    /**
     * Invoked when the mouse wheel is scrolled while the registered window has focus.
     */
    virtual void OnMouseWheel(MouseWheelEventArgs& e);

    /**
     * Invoked when the attached window is resized.
     */
    virtual void OnResize(ResizeEventArgs& e);

    /**
     * Invoked when the registered window instance is destroyed.
     */
    virtual void OnWindowDestroy();
```

The OnUpdate method declared on line 58 is invoked whenever the game logic should be updated. It is invoked when the WM_PAINT message is received by the Window.

The OnRender method is invoked whenever the screen should be redrawn. It is invoked immediately after the OnUpdate callback function.

The OnKeyPressed and OnKeyReleased callback functions are invoked whenever a key is pressed or released on the keyboard while the render window has focus.

The OnMouseMoved, OnMouseButtonPressed, and OnMouseButtonReleased callback functions are invoked whenever the mouse moves, or any mouse button is pressed or released over the client area of the render window.

The OnMouseWheel callback function is invoked whenever the mouse wheel is scrolled while the mouse cursor is over the screen. In this case, the render window does not need to have keyboard focus for this event to be dispatched to the window.

The OnResize method is invoked when the render window is resized. The implementation of the child class does not need to be concerned with resizing the swap chain buffers of the render window (this is handled automatically in the Window class) but does need to resize any resources that are allocated specifically by the derived class.

The OnWindowDestroy method is called when the render window is being destroyed. The default behaviour of this method is to call the UnloadContent method. A child class can override the OnWindowDestroy method if this is not intended behaviour.

The Game class also provides protected access to the window instance that is created by default in the Game::Initialize method.

```cpp
    std::shared_ptr<Window> m_pWindow;

private:
    std::wstring m_Name;
    int m_Width;
    int m_Height;
    bool m_vSync;

};
```

Most of the methods of the Game class do nothing. They simply provide a means to invoke the window callbacks from the window message process. The only exceptions are the Game::Initialize and Game::Destroy methods which create and destroy the render window instance.

Game::Initialize

The purpose of the Game::Initialize method is to initialize the game specific state. GPU resources should be allocated in the LoadContent method which should be provided by the child class.

```cpp
bool Game::Initialize()
{
    // Check for DirectX Math library support.
    if (!DirectX::XMVerifyCPUSupport())
    {
        MessageBoxA(NULL, "Failed to verify DirectX Math library support.", "Error", MB_OK | MB_ICONERROR);
        return false;
    }

    m_pWindow = Application::Get().CreateRenderWindow(m_Name, m_Width, m_Height, m_vSync);
    m_pWindow->RegisterCallbacks(shared_from_this());
    m_pWindow->Show();

    return true;
}
```

Before utilizing any functions of the DirectX Math library, support for the required CPU instruction sets on the end user’s computer must be verified [7]. On line 23 the XMVerifyCPUSupport function is used to verify CPU support on the user’s computer. If CPU support is not available, the Initialize method will return false and the Application’s Run method will return an error code.

On lines 29-30, a Window is created by the Application class and event callbacks are registered for the Game class.

Before the function returns, the Window is shown on line 31.
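Because Game::Initialize passes shared_from_this() to the window, the Game object must already be owned by a std::shared_ptr when Initialize is called. The following is a minimal sketch of that requirement. `Listener` and `Demo` are hypothetical stand-ins for the Window and Game classes, storing a std::weak_ptr in the listener is a choice made for this sketch only, and a C++17 compiler is assumed (where calling shared_from_this on an object that is not owned by a shared_ptr throws std::bad_weak_ptr).

```cpp
#include <memory>

struct Demo; // Defined below.

// Hypothetical stand-in for the Window: it remembers which object should
// receive its callbacks.
struct Listener
{
    std::weak_ptr<Demo> target;

    void RegisterCallbacks(const std::shared_ptr<Demo>& demo) { target = demo; }
};

// Hypothetical stand-in for the Game class.
struct Demo : public std::enable_shared_from_this<Demo>
{
    // Returns false if this object is not owned by a std::shared_ptr.
    bool Initialize(Listener& listener)
    {
        try
        {
            listener.RegisterCallbacks(shared_from_this());
        }
        catch (const std::bad_weak_ptr&)
        {
            return false;
        }
        return true;
    }
};
```

A `Demo` created with std::make_shared initializes successfully, while one created on the stack does not. This is why a demo built on the Game class is expected to be created through a shared pointer before Initialize is called.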

Game::Destroy

The default implementation of the Destroy method is simply to tell the application to destroy the render window that was created in the Game::Initialize method.

```cpp
void Game::Destroy()
{
    Application::Get().DestroyWindow(m_pWindow);
    m_pWindow.reset();
}
```

The remaining methods of the Game class are empty and will be overridden by the demo class for this lesson.

Before delving into the details of the demo class for this lesson, it is important to understand some basic concepts about shaders.

Shaders

In order to implement the demo for this lesson, two shaders are required to perform the rendering.

- Vertex Shader

- Pixel Shader

The Vertex Shader is responsible for transforming the vertices of the object being rendered from object-space to clip-space. The clip-space vertices are required by the (fixed-function) Rasterizer Stage of the rendering pipeline in order to clip the rendering primitives against the view frustum and to compute the per-pixel (or per-fragment) attributes across the face of the rendered primitives. The interpolated vertex attributes are passed to the Pixel Shader in order to compute the final color of the pixel.

The Pixel Shader is responsible for computing the final color of the pixel that is rendered to the screen (or an offscreen render target). It receives the interpolated vertex attributes that are computed by the Rasterizer Stage and usually outputs at least one color value that is written to a render target. In this lesson, a very simple pixel shader is shown. In later lessons, more complex lighting equations will be used to create a more realistic rendering of a scene.

Vertex Shader

In this lesson, a simple vertex shader is discussed. This lesson is not intended to be an exhaustive description of how to write vertex shaders but to provide a basic understanding of how to write a simple vertex shader for the purpose of this lesson.

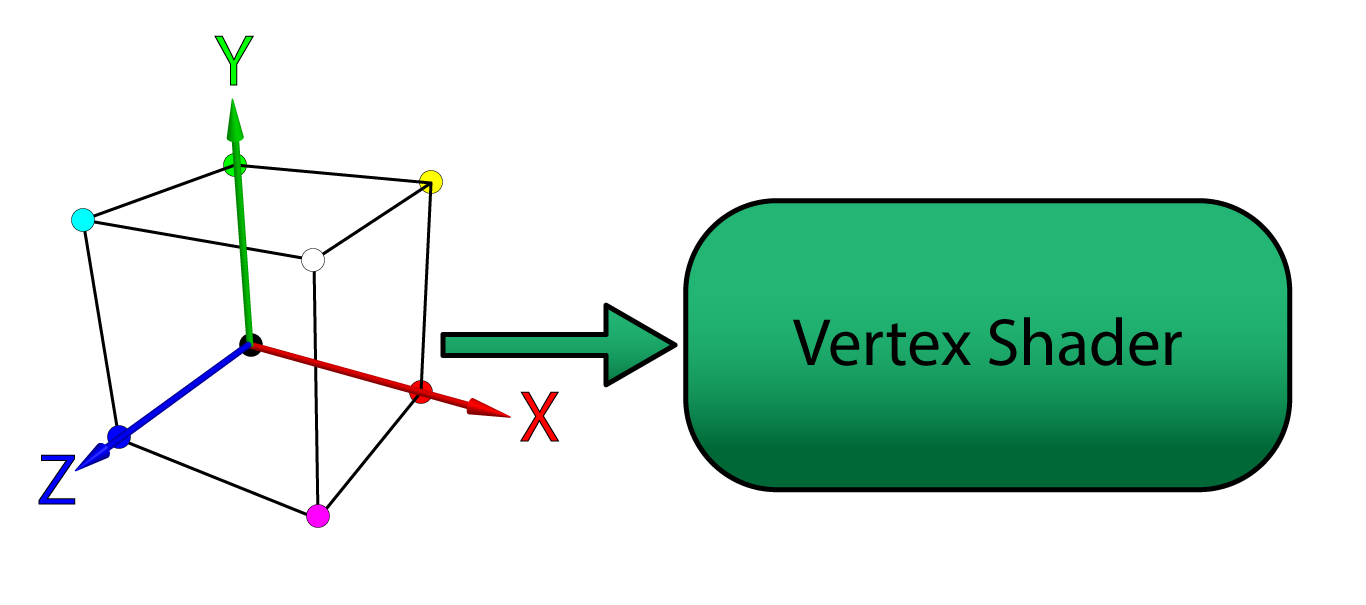

The basic concept of a vertex shader is that it receives the vertices that describe a model (expressed in object-space, or model-space) and performs zero or more transformations on the attributes of the vertices in order to produce the vertex attributes for the next stage of the rendering pipeline (this is usually the rasterizer stage but it could also be the geometry or tessellation stages).

The model vertices in object-space are sent from the application to the vertex shader stage of the rendering pipeline.

A vertex can contain any number of attributes. For example, the vertex position is a possible attribute of a vertex. Vertex color, normal, or texture coordinate are other examples of vertex attributes. For this lesson, only vertex position and color are used as vertex attributes. In later lessons, the vertex normal and texture coordinates are used.

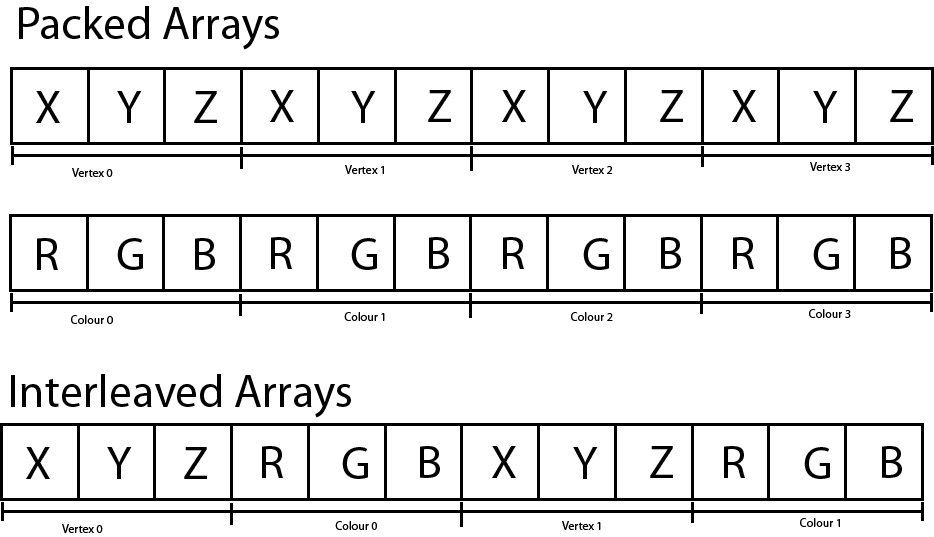

Vertex attributes can be sent to the GPU in either packed or interleaved format. Attributes that are stored in a packed format are usually stored in separate buffers (one buffer for position, another for color, etc.). Packed attributes are similar in concept to a Struct of Arrays (SoA).

Attributes that are stored in an interleaved format are usually stored in a single array. Interleaved attributes are similar in concept to an Array of Structs (AoS). Although it is easier to think of vertices as an Array of Structs, it may actually be better for performance to store the attributes of a vertex as a Struct of Arrays, especially if not all attributes are accessed in the vertex shader, since fewer fetches to global memory are required and fetches can be better coalesced.

Packed versus interleaved arrays. Packed arrays store the vertex attributes in separate buffers while interleaved arrays store the vertex attributes interleaved in a single buffer.
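On the CPU side, the two layouts can be sketched as follows. The type names are hypothetical, and the size checks assume a typical platform where a float is 4 bytes and the structures need no padding.

```cpp
#include <cstddef>
#include <vector>

struct Float3 { float x, y, z; };

// Interleaved (Array of Structs): a single buffer in which the position and
// color of each vertex sit side by side.
struct InterleavedVertex
{
    Float3 position;
    Float3 color;
};
using InterleavedVertices = std::vector<InterleavedVertex>;

// Packed (Struct of Arrays): one buffer per attribute.
struct PackedVertices
{
    std::vector<Float3> positions;
    std::vector<Float3> colors;
};

// Distance in bytes between two consecutive positions in each layout.
constexpr std::size_t InterleavedPositionStride = sizeof(InterleavedVertex);
constexpr std::size_t PackedPositionStride      = sizeof(Float3);

static_assert(sizeof(Float3) == 12, "assumes 4-byte floats without padding");
static_assert(offsetof(InterleavedVertex, color) == 12, "color follows position");
```

A shader that only reads positions steps over 24 bytes per vertex in the interleaved layout but only 12 bytes in the packed layout, which is the reason the packed layout can reduce memory traffic.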

How to specify the input layout of the vertex attributes is described later in the lesson.

Shader Semantics

The vertex shader can output one or more attributes to the next stage of the rendering pipeline but it must at least output a position attribute bound to the SV_Position system value semantic. Semantics are a way to tell the Input Assembler how to link the buffer data supplied by the application to the input parameters expected by the shader. Semantics are also the language syntax used to link output parameters from one shader stage to the input parameters to another shader stage.

In an HLSL shader the semantic name for the variable follows the colon (:) character in a variable declaration.

```hlsl
struct VertexPosColor
{
    float3 Position : POSITION;
    float3 Color    : COLOR;
};
```

The snippet shows an example of a vertex definition in an HLSL shader. The VertexPosColor structure declares two member variables (vertex attributes):

- Position

- Color

The Position attribute is bound to the POSITION semantic and the Color attribute is bound to the COLOR semantic. These semantics will be used later to specify how the application data is bound to the vertex attributes in HLSL.

The semantic names themselves are arbitrary. The Position and Color attributes could be bound to FOO and BAR semantics but this would not be very descriptive for anyone else trying to read your code. The only requirement is that the input and output semantics match (and the types have to be convertible).

Transformation and Spaces

In order to animate the object in the scene, a basic understanding of transformation and spaces must be established. If a refresher on transformation and spaces is needed, please refer to the articles titled Coordinate Systems, Matrices, and Understanding the View Matrix.

For this demo, an object is placed at the origin of the world and rotated. The camera is placed some distance away from the origin in order to view the object. The transformation matrix that rotates the object is called the model matrix and the transformation matrix to place the camera in the scene is called the view matrix. A final transformation called the projection matrix is also applied in order to transform the vertices from view space to clip space. These three matrices can be combined using matrix multiplication to produce a single matrix that can transform the vertices from object space to clip space. This matrix is appropriately named the model-view-projection matrix or just MVP matrix.
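The claim that the three matrices can be combined into one can be checked with a few lines of plain C++. This is a sketch under stated assumptions and not the demo's code: it uses the row-vector convention (the vector is on the left of the matrix), the `Mat4`, `Vec4`, `Transform`, `Multiply`, and `MakeMVP` names are hypothetical, and the `Projection` matrix is a toy matrix that only copies z into w, not a real perspective projection.

```cpp
#include <array>
#include <cstddef>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix: result[c] = sum over r of v[r] * m[r][c].
Vec4 Transform(const Vec4& v, const Mat4& m)
{
    Vec4 result{};
    for (std::size_t c = 0; c < 4; ++c)
        for (std::size_t r = 0; r < 4; ++r)
            result[c] += v[r] * m[r][c];
    return result;
}

// Matrix product a * b. With row vectors, a is applied first, then b.
Mat4 Multiply(const Mat4& a, const Mat4& b)
{
    Mat4 result{};
    for (std::size_t r = 0; r < 4; ++r)
        for (std::size_t c = 0; c < 4; ++c)
            for (std::size_t k = 0; k < 4; ++k)
                result[r][c] += a[r][k] * b[k][c];
    return result;
}

// Combine model, view, and projection into a single matrix.
Mat4 MakeMVP(const Mat4& model, const Mat4& view, const Mat4& projection)
{
    return Multiply(Multiply(model, view), projection);
}

// Model: rotate 90 degrees about the Z axis (the X axis maps to the Y axis).
const Mat4 Model = {{ {0.0f, 1.0f, 0.0f, 0.0f}, {-1.0f, 0.0f, 0.0f, 0.0f},
                      {0.0f, 0.0f, 1.0f, 0.0f}, {0.0f, 0.0f, 0.0f, 1.0f} }};
// View: push the scene 5 units along +Z, as if the camera moved back.
const Mat4 View = {{ {1.0f, 0.0f, 0.0f, 0.0f}, {0.0f, 1.0f, 0.0f, 0.0f},
                     {0.0f, 0.0f, 1.0f, 0.0f}, {0.0f, 0.0f, 5.0f, 1.0f} }};
// Projection: toy matrix (assumption of this sketch) that copies z into w.
const Mat4 Projection = {{ {1.0f, 0.0f, 0.0f, 0.0f}, {0.0f, 1.0f, 0.0f, 0.0f},
                           {0.0f, 0.0f, 1.0f, 1.0f}, {0.0f, 0.0f, 0.0f, 0.0f} }};
```

Transforming the point (1, 0, 0, 1) by `Model`, then `View`, then `Projection` gives the same result as transforming it once by `MakeMVP(Model, View, Projection)`. Combining the matrices once per object on the CPU therefore saves two matrix multiplications per vertex.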

The MVP matrix is required by the vertex shader to perform the transformations on the vertices of the model. This matrix is passed to the vertex shader using a ConstantBuffer object in HLSL.

```hlsl
struct ModelViewProjection
{
    matrix MVP;
};

ConstantBuffer<ModelViewProjection> ModelViewProjectionCB : register(b0);
```

Shader Model 5.1 introduces the ConstantBuffer template construct in order to enable support for descriptor arrays. See Resource Binding in HLSL for more information.

In HLSL the matrix type represents a 4×4 matrix (this is an alias of the float4x4 type). The ModelViewProjectionCB shader variable is bound to the b0 register. The b register type is reserved for constant buffer variables (see Shader Register & Register Spaces).

Vertex Shader Output

The vertex shader accepts vertex attributes passed from the application, transforms these inputs in zero or more ways (a vertex shader that performs no transformations on the input data is called a pass-through shader) and outputs the vertex attributes to be consumed by the next stage of the rendering pipeline. Just as the input attributes were bound to semantics, the output attributes are also bound to semantics.

```hlsl
struct VertexShaderOutput
{
    float4 Color    : COLOR;
    float4 Position : SV_Position;
};
```

The VertexShaderOutput structure groups the vertex shader’s output attributes. The (arbitrarily named) COLOR semantic is used again to pass a Color attribute to the pixel shader stage.

The SV_Position system value semantic is a required semantic to be output by the vertex shader and it is the only required parameter that must be passed to the rasterizer stage. The parameter bound to the SV_Position system value semantic is not a required input parameter for the pixel shader and can be omitted from the pixel shader’s input parameters (as will be shown later). However, the byte offsets of the other parameters (in this case the Color parameter has an offset of 0 bytes within the VertexShaderOutput structure) must match between the outputs and inputs of linked shader stages. For this reason, the Position parameter is placed last in the VertexShaderOutput structure.

The Main Entry Point

The main entry point for the shader is a single function that takes the vertex attributes as input arguments and outputs the transformed vertex attributes.

```hlsl
VertexShaderOutput main(VertexPosColor IN)
{
    VertexShaderOutput OUT;

    OUT.Position = mul(ModelViewProjectionCB.MVP, float4(IN.Position, 1.0f));
    OUT.Color = float4(IN.Color, 1.0f);

    return OUT;
}
```

Unlike in GLSL, the name of the entry point function in HLSL is not required to be “main”. In fact, it is possible to define several entry-point functions in a single HLSL source file. The name of the entry point for the shader is specified when compiling the shader.

Each invocation of the vertex shader operates over a single vertex (as opposed to triangles or the entire mesh) and outputs the transformed vertex. Many vertices are processed in parallel and it is not possible to modify variables defined within the scope of the vertex shader and expect that other invocations of the vertex shader will see those changes (even if you declare the variable as static in the global scope of the vertex shader!). For example, you cannot declare a counter variable in the vertex shader and allow each invocation to increment that counter to see how many vertices were processed (you may be able to achieve this using atomic counters, but that is beyond the scope of this lesson).

On line 24, the vertex position is multiplied by the MVP matrix to produce the clip-space position. The input position must be cast to a float4 using a function-style cast in order to perform the multiplication because the MVP matrix is a 4×4 matrix. A 1.0 is appended to the last component of the position to ensure the matrix translation is taken into consideration.

If 0.0 were used in the last component of the vector, then the vector would be rotated but not translated.

- If the vertex attribute is a position vector, then the 4th component of the vector must be a 1.

- If the vertex attribute is a normal vector, then the 4th component of the vector must be a 0.

Positional vectors are often referred to as points (because they represent a point in space). Directional vectors are simply referred to as vectors.
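The effect of the 4th component can be verified with a translation matrix. This is a minimal sketch in plain C++: the row-vector convention and the `Transform`, `AsPoint`, and `AsDirection` helpers are assumptions of the sketch and are not part of the demo's source code.

```cpp
#include <array>
#include <cstddef>

using Vec4 = std::array<float, 4>;
using Mat4 = std::array<std::array<float, 4>, 4>;

// Row vector times matrix: result[c] = sum over r of v[r] * m[r][c].
Vec4 Transform(const Vec4& v, const Mat4& m)
{
    Vec4 result{};
    for (std::size_t c = 0; c < 4; ++c)
        for (std::size_t r = 0; r < 4; ++r)
            result[c] += v[r] * m[r][c];
    return result;
}

// Translation by (2, 3, 4). With row vectors the offset lives in the last row,
// so it is scaled by the 4th component of the vector being transformed.
const Mat4 Translation = {{ {1.0f, 0.0f, 0.0f, 0.0f}, {0.0f, 1.0f, 0.0f, 0.0f},
                            {0.0f, 0.0f, 1.0f, 0.0f}, {2.0f, 3.0f, 4.0f, 1.0f} }};

// A point has w = 1, so the translation is applied.
Vec4 AsPoint(float x, float y, float z)     { return { x, y, z, 1.0f }; }

// A direction (such as a normal) has w = 0, so the translation is ignored.
Vec4 AsDirection(float x, float y, float z) { return { x, y, z, 0.0f }; }
```

With these definitions, the point (1, 1, 1) moves to (3, 4, 5) while the direction (1, 1, 1) is left unchanged.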

On line 25 the input Color attribute is passed to the output Color attribute of the OUT variable. The output color also expects a float4 to be used as output so the function-style cast is used again. In this case, the final component is set to 1.0. The last component in a color value is often referred to as the alpha (α) which in most cases is interpreted as the opacity of the color (but this is not a requirement). Since alpha blending is not used for this example, the final component of the color attribute has absolutely no impact on the final output color of the pixel shader (try setting this value to 0.0 and observe the result).

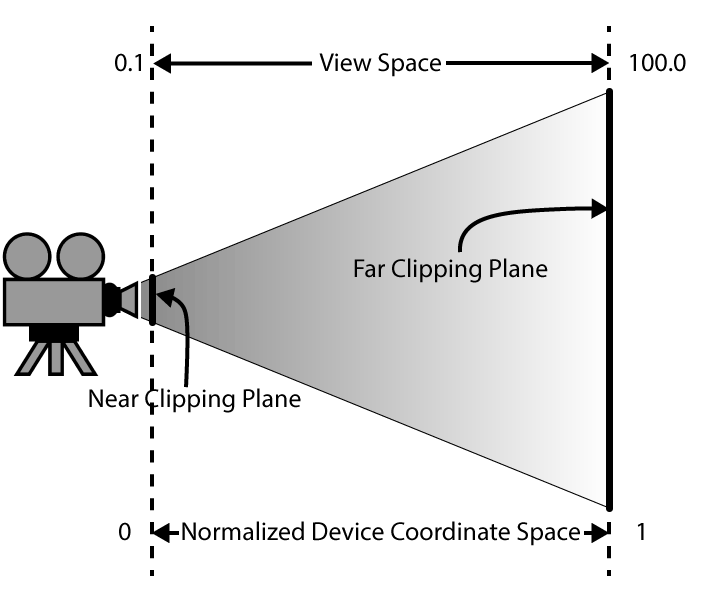

On line 27, the OUT variable is returned and the result is passed as input to the Rasterizer stage. The rasterizer uses the Position parameter to determine the pixel’s coordinates (in screen space, by applying the viewport) and the depth value (in normalized-device coordinate space) which is written to the depth buffer. The interpolated Color parameter is passed as input to the pixel shader stage.
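What the rasterizer does with the clip-space position can be sketched on the CPU. The following assumes the usual Direct3D conventions (NDC x and y in the range -1 to 1 with y pointing up, NDC depth in the range 0 to 1, and screen-space y pointing down). The `Float4`, `Viewport`, `ScreenPoint`, and `ClipToScreen` names are hypothetical and only illustrate the perspective divide followed by the viewport transform.

```cpp
#include <cassert>

struct Float4      { float x, y, z, w; };
struct Viewport    { float topLeftX, topLeftY, width, height, minDepth, maxDepth; };
struct ScreenPoint { float x, y, depth; };

// Convert a clip-space position to screen coordinates and a depth value.
ScreenPoint ClipToScreen(const Float4& clip, const Viewport& vp)
{
    assert(clip.w != 0.0f);

    // Perspective divide: clip space to normalized device coordinates.
    const float ndcX = clip.x / clip.w;
    const float ndcY = clip.y / clip.w;
    const float ndcZ = clip.z / clip.w;

    // Viewport transform: NDC to pixels. The y axis is flipped because
    // screen-space y grows downwards.
    ScreenPoint p;
    p.x     = vp.topLeftX + (ndcX + 1.0f) * 0.5f * vp.width;
    p.y     = vp.topLeftY + (1.0f - ndcY) * 0.5f * vp.height;
    p.depth = vp.minDepth + ndcZ * (vp.maxDepth - vp.minDepth);
    return p;
}
```

For an 800 by 600 viewport with a depth range of 0 to 1, the clip-space position (0, 0, 2.5, 5) lands in the center of the screen at (400, 300) with a depth of 0.5.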

The entire listing for the vertex shader is shown below.

```hlsl
struct ModelViewProjection
{
    matrix MVP;
};

ConstantBuffer<ModelViewProjection> ModelViewProjectionCB : register(b0);

struct VertexPosColor
{
    float3 Position : POSITION;
    float3 Color    : COLOR;
};

struct VertexShaderOutput
{
    float4 Color    : COLOR;
    float4 Position : SV_Position;
};

VertexShaderOutput main(VertexPosColor IN)
{
    VertexShaderOutput OUT;

    OUT.Position = mul(ModelViewProjectionCB.MVP, float4(IN.Position, 1.0f));
    OUT.Color = float4(IN.Color, 1.0f);

    return OUT;
}
```

Pixel Shader

The purpose of the pixel shader is to produce the final color that should be written to the currently bound render target(s). The pixel shader can write to a maximum of eight color targets and one depth target. For this example, only a single color render target and a single depth target are bound to the output merger stage.

The pixel shader takes the interpolated color value from the Rasterizer stage and outputs that color to the only bound render target using the SV_Target system value semantic.

```hlsl
struct PixelShaderInput
{
    float4 Color : COLOR;
};

float4 main( PixelShaderInput IN ) : SV_Target
{
    return IN.Color;
}
```

You may notice that the pixel shader is not using the Position attribute that was computed in the vertex shader. Only the Color attribute is used and therefore the Position parameter can be omitted from the PixelShaderInput structure.

The main entry point for the pixel shader is called main (but it is not necessary to call the entry-point function main) and it takes the PixelShaderInput as an input parameter and outputs a single color. This is the simplest form of a pixel shader (actually, no pixel shader would be simpler – it is valid to define a graphics pipeline state without a pixel shader if you only need depth information).

The Tutorial Class

The Tutorial class (actually Tutorial2 since this is the 2nd tutorial in this series) is derived from the Game class described earlier in this lesson. Besides overriding the LoadContent and UnloadContent pure virtual functions from the Game class, the Tutorial2 class also provides a few helper functions in order to upload vertex and index data to the GPU and perform resource transitioning. Resource transitions have been introduced in the previous lesson but uploading resources to the GPU is a new concept that hasn’t been introduced yet. The Tutorial2 class will also load the shaders, define the input layout, create the root signature and pipeline state object.

The Tutorial Header

Before showing any source code, the Tutorial2.h header file is first described in order to provide some background for the functions that are described in more detail in the following sections.

```cpp
#pragma once

#include <Game.h>
#include <Window.h>

#include <DirectXMath.h>
```

The Tutorial2 class has a dependency on the Game class which is declared in the Game.h header file included on line 3. The Window class is declared in the Window.h header file (not to be confused with the Windows.h header file which contains the Win32 programming APIs).

The DirectXMath.h header file is included in the Windows 10 SDK and is considered to be part of the DirectX APIs [7]. This header includes the vector and matrix definitions that are used to define the model, camera, and projection transformation matrices for the demo.

```cpp
class Tutorial2 : public Game
{
public:
    using super = Game;

    Tutorial2(const std::wstring& name, int width, int height, bool vSync = false);
    /**
     * Load content required for the demo.
     */
    virtual bool LoadContent() override;

    /**
     * Unload demo specific content that was loaded in LoadContent.
     */
    virtual void UnloadContent() override;
```

The constructor for the Tutorial2 class declared on line 13 is similar to that of the Game class. The name parameter defines the window title for the render window. The width and height parameters define how large the window is when it is created. The vSync option determines if the renderer should synchronize with the vertical refresh rate of the screen.

The LoadContent and UnloadContent functions override the pure virtual functions from the Game class that were described previously.

```cpp
protected:
    /**
     * Update the game logic.
     */
    virtual void OnUpdate(UpdateEventArgs& e) override;

    /**
     * Render stuff.
     */
    virtual void OnRender(RenderEventArgs& e) override;

    /**
     * Invoked by the registered window when a key is pressed
     * while the window has focus.
     */
    virtual void OnKeyPressed(KeyEventArgs& e) override;

    /**
     * Invoked when the mouse wheel is scrolled while the registered window has focus.
     */
    virtual void OnMouseWheel(MouseWheelEventArgs& e) override;


    virtual void OnResize(ResizeEventArgs& e) override;
```

The OnUpdate method is invoked whenever the game logic needs to be updated and the OnRender method is invoked whenever the game screen needs to be drawn.

The OnKeyPressed and OnMouseWheel callbacks are invoked from the Window class whenever a key is pressed, or the mouse wheel is moved, while the game window has focus.

The OnResize method is called whenever the render window is resized (for example, if the window is toggled between fullscreen states).

The Tutorial2 class also defines a few helper functions.

```cpp
private:
    // Helper functions
    // Transition a resource
    void TransitionResource(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList,
        Microsoft::WRL::ComPtr<ID3D12Resource> resource,
        D3D12_RESOURCE_STATES beforeState, D3D12_RESOURCE_STATES afterState);

    // Clear a render target view.
    void ClearRTV(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList,
        D3D12_CPU_DESCRIPTOR_HANDLE rtv, FLOAT* clearColor);

    // Clear the depth of a depth-stencil view.
    void ClearDepth(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList,
        D3D12_CPU_DESCRIPTOR_HANDLE dsv, FLOAT depth = 1.0f );

    // Create a GPU buffer.
    void UpdateBufferResource(Microsoft::WRL::ComPtr<ID3D12GraphicsCommandList2> commandList,
        ID3D12Resource** pDestinationResource, ID3D12Resource** pIntermediateResource,
        size_t numElements, size_t elementSize, const void* bufferData,
        D3D12_RESOURCE_FLAGS flags = D3D12_RESOURCE_FLAG_NONE );

    // Resize the depth buffer to match the size of the client area.
    void ResizeDepthBuffer(int width, int height);
```

The functionality of the TransitionResource and ClearRTV methods was described in the previous lesson. The ClearDepth method is very similar to the ClearRTV method except it is used to clear a depth-stencil view (DSV) instead of a render target view (RTV).

The UpdateBufferResource method creates a resource large enough to hold the buffer data and also creates an intermediate upload buffer that is used to transfer the buffer data from CPU memory into GPU memory. The intermediate upload buffer needs to be resident until the graphics command list is finished uploading that resource to the destination buffer in GPU memory. For that reason, the resources returned by this function cannot be destroyed until the buffer data has been fully uploaded to the destination resource.

The ResizeDepthBuffer method is used to resize the depth buffer that is used by the demo. The depth buffer ensures that geometry that appears further away from the viewer is properly occluded by geometry that appears closer to the viewer. The depth buffer is not owned by the Window class (together with the swap chain) because the depth buffer is not a required resource in order to display the final rendered image on the screen.

The depth buffer stores the depth of each pixel in normalized device coordinate (NDC) space, in the range 0 to 1. When the depth buffer is visualized, depth values far away from the viewer appear white (1.0) and depth values close to the viewer appear darker (toward 0.0). Only pixels that are not occluded based on the current value in the depth buffer are drawn. This ensures that primitives that are further away from the viewer are not drawn on top of primitives that are closer to the viewer.
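The depth test can be illustrated on the CPU. The following is a minimal sketch and not part of the demo: the `DepthBuffer` type and its `testAndWrite` method are hypothetical names, and the sketch assumes a "less than" depth comparison with the buffer cleared to 1.0.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU-side stand-in for a depth buffer (illustration only).
struct DepthBuffer
{
    int width;
    int height;
    std::vector<float> depth;

    // Every pixel starts at the far plane (1.0), the default clear value of ClearDepth.
    DepthBuffer(int w, int h)
        : width(w), height(h), depth(static_cast<std::size_t>(w) * h, 1.0f)
    {
        assert(w > 0 && h > 0);
    }

    // Returns true (and stores the new depth) if the fragment at (x, y) is
    // closer to the viewer than the stored value. Assumes a "less than" test.
    bool testAndWrite(int x, int y, float z)
    {
        assert(x >= 0 && x < width && y >= 0 && y < height);
        float& stored = depth[static_cast<std::size_t>(y) * width + x];
        if (z < stored)
        {
            stored = z;
            return true;
        }
        return false;
    }
};
```

A fragment with depth 0.5 passes against a cleared buffer, a later fragment at 0.8 on the same pixel is rejected, and a fragment at 0.2 passes again and replaces the stored value.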

        uint64_t m_FenceValues[Window::BufferCount] = {};

In order to perform correct synchronization, the Tutorial2 class needs to track the fence values for each rendered frame. The number of frames that need to be tracked is stored in the Window::BufferCount static constant.
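The bookkeeping behind per-frame fence values can be sketched without any GPU. The following is only an illustration under stated assumptions: `FrameTracker`, `signal`, and `canReuse` are hypothetical names, `kBufferCount` stands in for Window::BufferCount (its value of 3 is assumed), and the completed fence value is passed in by hand instead of being queried from a fence object.

```cpp
#include <cassert>
#include <cstdint>

constexpr int kBufferCount = 3; // stand-in for Window::BufferCount (assumed value)

// Hypothetical helper that mirrors the role of m_FenceValues.
struct FrameTracker
{
    std::uint64_t fenceValues[kBufferCount] = {};
    std::uint64_t nextFenceValue = 0;

    // Record the fence value that was signaled after submitting the work
    // for the given back buffer.
    std::uint64_t signal(int bufferIndex)
    {
        assert(bufferIndex >= 0 && bufferIndex < kBufferCount);
        fenceValues[bufferIndex] = ++nextFenceValue;
        return fenceValues[bufferIndex];
    }

    // A back buffer can be reused once the GPU has reached the fence value
    // that was recorded for it.
    bool canReuse(int bufferIndex, std::uint64_t completedValue) const
    {
        assert(bufferIndex >= 0 && bufferIndex < kBufferCount);
        return completedValue >= fenceValues[bufferIndex];
    }
};
```

If frames 0 and 1 are signaled with the values 1 and 2 and the GPU has completed the value 1, buffer 0 can be reused while buffer 1 cannot.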

In order to demonstrate a basic rendering pipeline, a cube is rendered using a vertex buffer and index buffer. For each of these resources, a view is created which describes the buffer resources to the rendering pipeline.

        // Vertex buffer for the cube.
        Microsoft::WRL::ComPtr<ID3D12Resource> m_VertexBuffer;
        D3D12_VERTEX_BUFFER_VIEW m_VertexBufferView;
        // Index buffer for the cube.
        Microsoft::WRL::ComPtr<ID3D12Resource> m_IndexBuffer;
        D3D12_INDEX_BUFFER_VIEW m_IndexBufferView;

The m_VertexBuffer resource stores the vertex data for the cube and the m_IndexBuffer resource stores the index data that is used to render the cube.

        // Depth buffer.
        Microsoft::WRL::ComPtr<ID3D12Resource> m_DepthBuffer;
        // Descriptor heap for depth buffer.
        Microsoft::WRL::ComPtr<ID3D12DescriptorHeap> m_DSVHeap;

The depth buffer is stored in the m_DepthBuffer member variable. Similar to the render target views for the swap chain, the depth buffer requires a depth-stencil view. The depth-stencil view is created in a descriptor heap.

        // Root signature
        Microsoft::WRL::ComPtr<ID3D12RootSignature> m_RootSignature;

        // Pipeline state object.
        Microsoft::WRL::ComPtr<ID3D12PipelineState> m_PipelineState;

        D3D12_VIEWPORT m_Viewport;
        D3D12_RECT m_ScissorRect;

The root signature describes the parameters passed to the various stages of the rendering pipeline and the pipeline state object describes the rendering pipeline itself.

The viewport and scissor rect variables are used to initialize the rasterizer stage of the rendering pipeline.

        float m_FoV;

        DirectX::XMMATRIX m_ModelMatrix;
        DirectX::XMMATRIX m_ViewMatrix;
        DirectX::XMMATRIX m_ProjectionMatrix;

        bool m_ContentLoaded;
    };

The m_FoV variable is used to store the current field of view of the camera. For this demo, the mouse wheel is used to “zoom in” on the cube in the center of the scene.

The model, view and projection matrices are used to compute the MVP matrix that is used in the vertex shader to rotate the cube, position the camera, and project the vertices into clip-space.
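How the three matrices combine into one MVP matrix can be shown with plain arrays. This is a minimal sketch and not the demo's code: `Mat4`, `Vec4`, and all function names are hypothetical stand-ins for the DirectXMath types, and the sketch assumes the row-vector convention that DirectXMath uses, so the model matrix is applied first, then the view matrix, then the projection matrix.

```cpp
#include <array>
#include <cassert>

using Mat4 = std::array<std::array<float, 4>, 4>;
using Vec4 = std::array<float, 4>;

inline Mat4 identity()
{
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// Translation is stored in the fourth row (row-vector convention).
inline Mat4 translation(float x, float y, float z)
{
    Mat4 m = identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

inline Mat4 uniformScale(float s)
{
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

inline Mat4 multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Row vector times matrix.
inline Vec4 transform(const Vec4& v, const Mat4& m)
{
    Vec4 r{};
    for (int j = 0; j < 4; ++j)
        for (int k = 0; k < 4; ++k)
            r[j] += v[k] * m[k][j];
    return r;
}

// MVP = Model * View * Projection.
inline Mat4 composeMVP(const Mat4& model, const Mat4& view, const Mat4& projection)
{
    assert(model[3][3] == 1.0f); // this sketch expects affine model matrices
    return multiply(multiply(model, view), projection);
}
```

With a model matrix that scales by 2, a view matrix that translates by 10 units along z, and an identity projection, the point (1, 1, 1, 1) is transformed to (2, 2, 12, 1), which is the same result as applying the three matrices one after another.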

The m_ContentLoaded variable is used to indicate when the game content has been loaded.

The implementation details for the Tutorial2 class are described next.

Tutorial2 Preamble

The preamble for the Tutorial2 source file contains the required header files for the class.

    #include <Tutorial2.h>

    #include <Application.h>
    #include <CommandQueue.h>
    #include <Helpers.h>
    #include <Window.h>

    #include <wrl.h>
    using namespace Microsoft::WRL;

    #include <d3dx12.h>
    #include <d3dcompiler.h>

    #include <algorithm> // For std::min and std::max.
    #if defined(min)
    #undef min
    #endif

    #if defined(max)
    #undef max
    #endif

    using namespace DirectX;

The Tutorial2.h header file included on line 1 is described in the previous section.

The Application.h header file included on line 3 contains the definition of the Application class. The Application class was briefly described earlier in this post but not in full detail. The Application class is used to create Window instances for displaying the rendered image.

The CommandQueue.h header file contains the definition of the CommandQueue class that was described in detail in the section titled Command Queue.

The Helpers.h header file contains helper functions and macros that are used throughout the demo source code.

The Window.h header file contains the Window class definition (not to be mistaken with the Windows.h WIN32 API header file).

The wrl.h header file contains the Windows Runtime C++ Template Library. It is required for the ComPtr template class.

The d3dx12.h header file included on line 11 is not considered to be part of the standard DirectX 12 API and must be downloaded separately from the Microsoft GitHub page (https://github.com/Microsoft/DirectX-Graphics-Samples/blob/master/Libraries/D3DX12/d3dx12.h).

The d3dcompiler.h header file contains functions that are required to load (precompiled) shaders from disc.

If you use the D3DCompiler functions, do not forget to link against the D3Dcompiler_47.lib library and copy the D3dcompiler_47.dll to the same folder as the binary executable when distributing your project.

A redistributable version of the D3dcompiler_47.dll file can be found in the Windows 10 SDK installation folder at

For more information, refer to the MSDN blog post at: https://blogs.msdn.microsoft.com/chuckw/2012/05/07/hlsl-fxc-and-d3dcompile/

The algorithm STL header file included on line 14 contains the std::min and std::max functions.

The Windows headers define min and max as global macros (unless NOMINMAX is defined before those headers are included). To prevent these macros from conflicting with std::min and std::max, the min and max macros are undefined on lines 15-21.
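The effect of the macros can be reproduced on any platform. The following sketch defines its own function-like min and max macros to imitate the Windows headers (an assumption made for illustration; on Windows they come from the SDK headers). The function names are hypothetical. It shows two ways to reach the standard functions: wrapping the name in parentheses while the macros are active, and calling the functions normally after the macros are undefined.

```cpp
#include <algorithm>
#include <cassert>

// Imitation of the macros defined by the Windows headers (illustration only).
#define min(a, b) (((a) < (b)) ? (a) : (b))
#define max(a, b) (((a) > (b)) ? (a) : (b))

// While the macros are active, parentheses around the name suppress the
// function-like macro expansion, so std::min itself is called.
inline int smallerWithMacros(int a, int b) { return (std::min)(a, b); }

// Same approach as the preamble: remove the macros.
#if defined(min)
#undef min
#endif

#if defined(max)
#undef max
#endif

// After the #undef, std::max can be called normally.
inline int largerAfterUndef(int a, int b) { return std::max(a, b); }

inline void checkMinMax()
{
    assert(smallerWithMacros(3, 7) == 3);
    assert(largerAfterUndef(3, 7) == 7);
}
```

Defining NOMINMAX before including the Windows headers is another common way to avoid the macros entirely.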

In order to avoid polluting the global namespace, all of the DirectX Math types are declared in the DirectX namespace. To avoid having to type “DirectX::” for all DirectX math types, the DirectX namespace is imported into the current source file on line 23.

Starting with the C++17 standard, the algorithm header file also contains the clamp function. Until the C++17 standard is fully implemented in Visual Studio, an implementation of the clamp function needs to be provided manually 😭.

    // Clamp a value between a min and max range.
    template<typename T>
    constexpr const T& clamp(const T& val, const T& min, const T& max)
    {
        return val < min ? min : val > max ? max : val;
    }
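The behavior of this helper at and beyond the range limits can be checked at compile time. The sketch below repeats the template inside a hypothetical `demo` namespace so that it is self-contained. The `clampFoV` function and its 12 and 90 degree limits are assumed values for illustration only.

```cpp
#include <cassert>

namespace demo
{
    // Same ternary chain as the clamp helper above.
    template<typename T>
    constexpr const T& clamp(const T& val, const T& min, const T& max)
    {
        return val < min ? min : val > max ? max : val;
    }

    // Hypothetical use: keep a field of view (in degrees) within a range.
    // The limits are assumptions, not values taken from the demo.
    inline float clampFoV(float fov)
    {
        return clamp(fov, 12.0f, 90.0f);
    }
}

static_assert(demo::clamp(5, 0, 10) == 5, "a value inside the range is unchanged");
static_assert(demo::clamp(-3, 0, 10) == 0, "a value below the range snaps to min");
static_assert(demo::clamp(42, 0, 10) == 10, "a value above the range snaps to max");
```

Note that all three arguments must have the same type T, so mixing a float value with integer limits does not compile without a cast.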

For this lesson, each vertex will have only 2 attributes:

- Position

- Color

Both the Position and Color vertex attributes are stored as 3-component 32-bit floating-point vectors. Vertices are stored using an interleaved storage format (described previously).

    // Vertex data for a colored cube.
    struct VertexPosColor
    {
        XMFLOAT3 Position;
        XMFLOAT3 Color;
    };

The VertexPosColor structure is used to group the vertex attributes to be passed to the vertex shader.
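The memory layout of an interleaved vertex can be verified with a few compile-time checks. In this sketch, `Float3` is a hypothetical stand-in for XMFLOAT3 (three 32-bit floats) so that the code compiles without the DirectXMath headers, and `colorOffset` is a hypothetical helper. The sizes assume a platform where float is 4 bytes.

```cpp
#include <cassert>
#include <cstddef>

// Stand-in for DirectX::XMFLOAT3 (illustration only).
struct Float3 { float x, y, z; };

struct VertexPosColor
{
    Float3 Position;
    Float3 Color;
};

static_assert(sizeof(Float3) == 12, "three 32-bit floats");
static_assert(sizeof(VertexPosColor) == 24, "stride of one interleaved vertex");
static_assert(offsetof(VertexPosColor, Position) == 0, "Position comes first");
static_assert(offsetof(VertexPosColor, Color) == 12, "Color follows Position");

// Byte offset of the Color attribute of vertex i in an interleaved buffer.
constexpr std::size_t colorOffset(std::size_t i)
{
    return i * sizeof(VertexPosColor) + offsetof(VertexPosColor, Color);
}

inline void checkLayout()
{
    assert(colorOffset(0) == 12);
    assert(colorOffset(2) == 60);
}
```

The 24-byte stride and the 12-byte offset of Color are the kind of values that a vertex buffer view and an input layout description have to agree on.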

Try to convert this demo to using packed vertex formats instead of interleaved vertex formats. Try to draw the mesh 1,000 or 10,000 times. Do you notice a performance difference? Is it better or worse than using interleaved vertex formats?

Next, the vertex data for the cube mesh is defined.

    static VertexPosColor g_Vertices[8] = {
        { XMFLOAT3(-1.0f, -1.0f, -1.0f), XMFLOAT3(0.0f, 0.0f, 0.0f) }, // 0
        { XMFLOAT3(-1.0f,  1.0f, -1.0f), XMFLOAT3(0.0f, 1.0f, 0.0f) }, // 1
        { XMFLOAT3( 1.0f,  1.0f, -1.0f), XMFLOAT3(1.0f, 1.0f, 0.0f) }, // 2
        { XMFLOAT3( 1.0f, -1.0f, -1.0f), XMFLOAT3(1.0f, 0.0f, 0.0f) }, // 3
        { XMFLOAT3(-1.0f, -1.0f,  1.0f), XMFLOAT3(0.0f, 0.0f, 1.0f) }, // 4
        { XMFLOAT3(-1.0f,  1.0f,  1.0f), XMFLOAT3(0.0f, 1.0f, 1.0f) }, // 5
        { XMFLOAT3( 1.0f,  1.0f,  1.0f), XMFLOAT3(1.0f, 1.0f, 1.0f) }, // 6
        { XMFLOAT3( 1.0f, -1.0f,  1.0f), XMFLOAT3(1.0f, 0.0f, 1.0f) }  // 7
    };

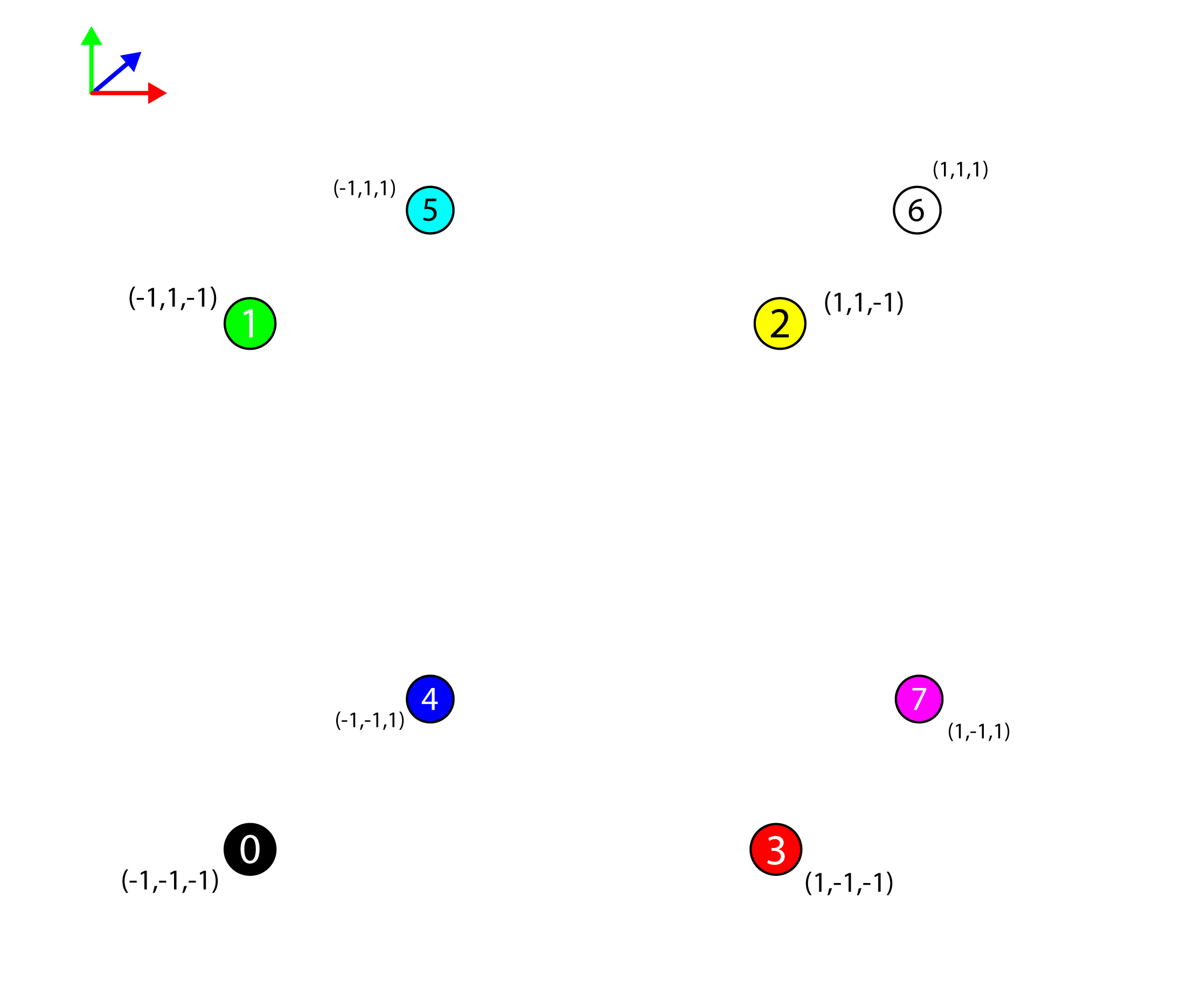

The g_Vertices static arrays defines the unique vertices that make up the cube. The image below represents the various vertices for the mesh.

The vertices by themselves are not sufficient to represent a solid cube mesh in 3D space. The Input Assembler stage of the rendering pipeline can render a set of one or more points, but points alone cannot be used to generate solid geometry.

The D3D_PRIMITIVE_TOPOLOGY enumeration specifies how the Input Assembler stage interprets the vertex data that is bound to the rendering pipeline. The Input Assembler stage of the rendering pipeline understands the following primitive types [8]:

- D3D_PRIMITIVE_TOPOLOGY_POINTLIST: A disconnected list of points.

- D3D_PRIMITIVE_TOPOLOGY_LINELIST: A list of disconnected lines.

- D3D_PRIMITIVE_TOPOLOGY_LINESTRIP: A list of connected lines.

- D3D_PRIMITIVE_TOPOLOGY_TRIANGLELIST: A list of disconnected triangles.

- D3D_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP: A list of connected triangles. Vertex \(n\) and vertex \(n-2, \forall n>1\) are implicitly connected to form the triangles.

- D3D_PRIMITIVE_TOPOLOGY_LINELIST_ADJ: A list of disconnected lines. Additional adjacency information is available to the Geometry shader stage.

- D3D_PRIMITIVE_TOPOLOGY_LINESTRIP_ADJ: A list of connected lines. Additional adjacency information is available to the Geometry shader stage.

- D3D_PRIMITIVE_TOPOLOGY_TRIANGLELIST_ADJ: A list of disconnected triangles. Additional adjacency information is available to the Geometry shader stage.

- D3D_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP_ADJ: A list of connected triangles. Additional adjacency information is available to the Geometry shader stage.
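The difference between list and strip topologies shows up in how many primitives a given number of vertices produces. The helper functions below are hypothetical and only count primitives for the topologies without adjacency. They are a sketch of the counting rules, not part of any DirectX API.

```cpp
#include <cassert>
#include <cstddef>

// Line list: every 2 vertices form one independent line.
constexpr std::size_t lineListCount(std::size_t vertexCount)
{
    return vertexCount / 2;
}

// Line strip: each vertex after the first adds one line.
constexpr std::size_t lineStripCount(std::size_t vertexCount)
{
    return vertexCount < 2 ? 0 : vertexCount - 1;
}

// Triangle list: every 3 vertices form one independent triangle.
constexpr std::size_t triangleListCount(std::size_t vertexCount)
{
    return vertexCount / 3;
}

// Triangle strip: each vertex after the first two adds one triangle.
constexpr std::size_t triangleStripCount(std::size_t vertexCount)
{
    return vertexCount < 3 ? 0 : vertexCount - 2;
}

inline void checkPrimitiveCounts()
{
    assert(triangleListCount(36) == 12); // e.g. 6 faces with 2 triangles each
    assert(triangleStripCount(4) == 2);  // a quad drawn as a strip
}
```

A quad therefore needs 6 vertices (or indices) as a triangle list but only 4 as a triangle strip.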

Points and lines cannot be used to generate a solid mesh (where the faces of the mesh are shaded in). Only triangles (and patches) can be used to generate a shaded mesh. For this example, the mesh is going to be generated from a list of triangles. In order to tell the Input Assembler stage how to generate the triangles for the mesh, an index buffer is used.

    static WORD g_Indicies[36] =
    {
        0, 1, 2, 0, 2, 3,
        4, 6, 5, 4, 7, 6,
        4, 5, 1, 4, 1, 0,
        3, 2, 6, 3, 6, 7,
        1, 5, 6, 1, 6, 2,
        4, 0, 3, 4, 3, 7
    };

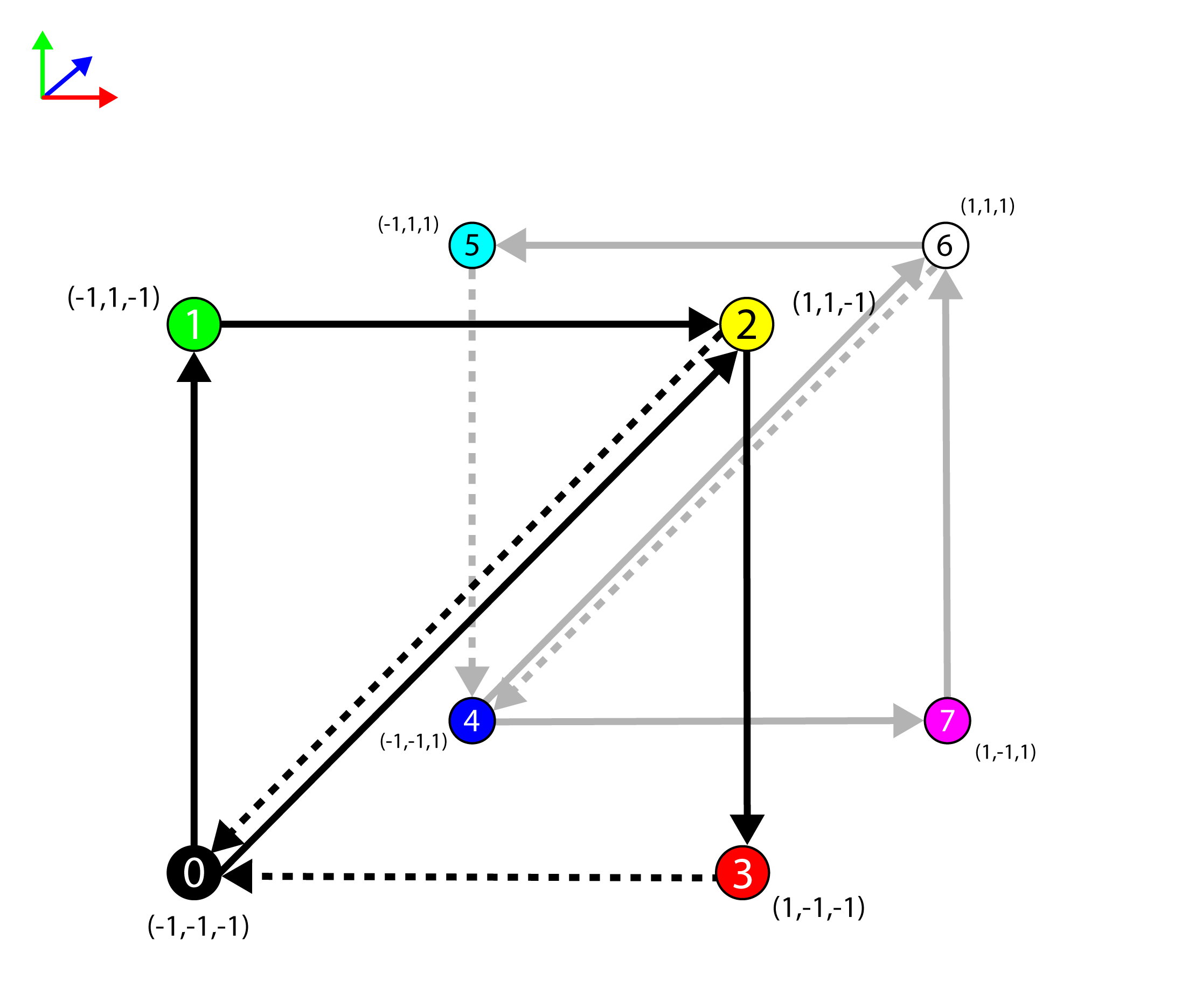

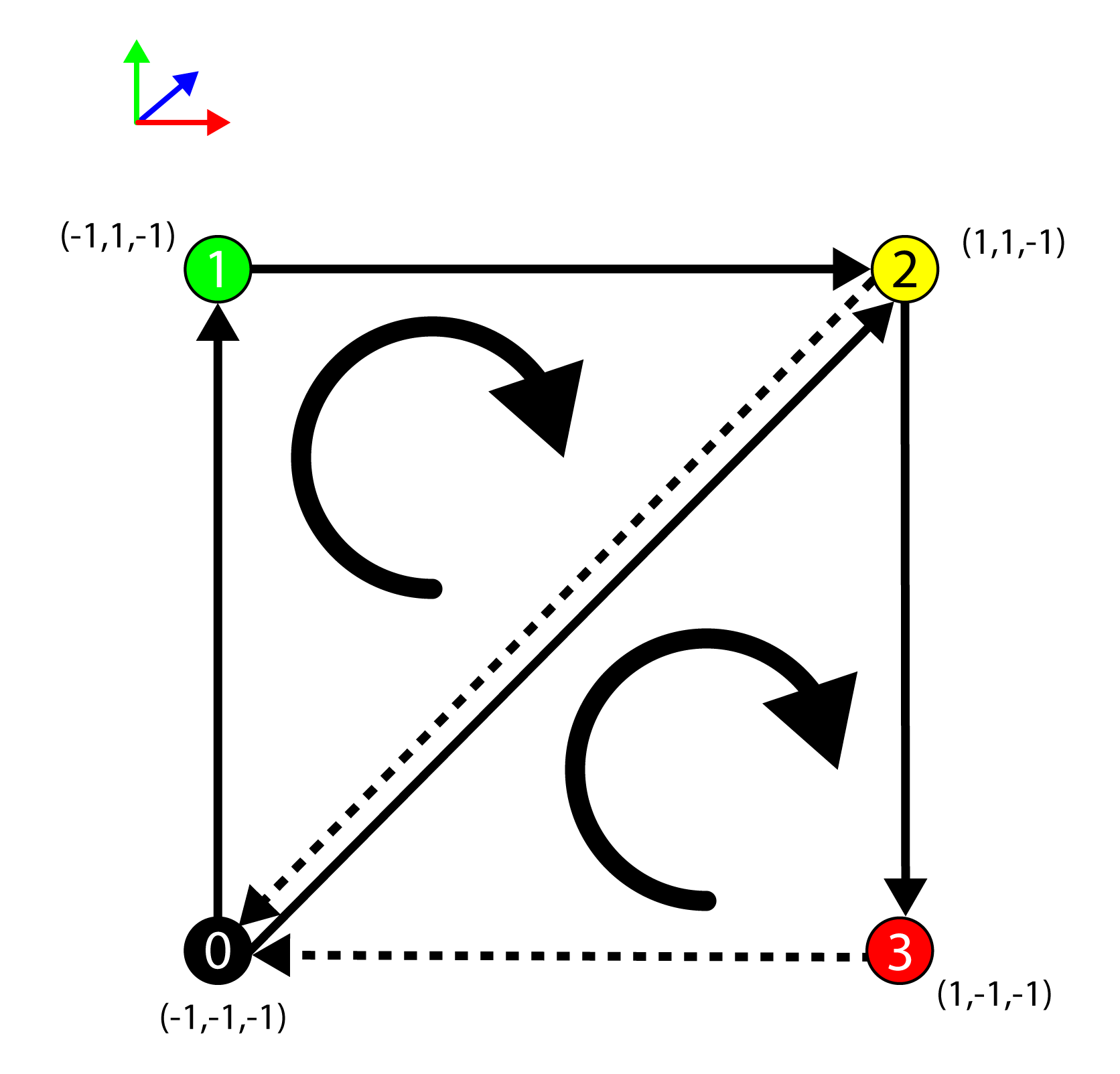

The g_Indicies array defines the index buffer that is used to represent the triangles that are used to create the solid cube. The values in the index buffer represent the index of the vertex in the vertex buffer (the g_Vertices array). The cube consists of 6 faces. Each face is composed of 2 triangles. The g_Indicies array contains 36 indices (3 indices per triangle). The image below shows only the first two faces of the cube.
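The relationship between the index data and the vertex data can be checked with a short sketch. The code below copies the index values into a hypothetical `kCubeIndices` array (std::uint16_t stands in for the Windows WORD type), and the helper functions are hypothetical as well. The 24-byte vertex size assumes two 3-component vectors of 32-bit floats.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Copy of the cube's index data (illustration only).
static const std::uint16_t kCubeIndices[36] = {
    0, 1, 2, 0, 2, 3,
    4, 6, 5, 4, 7, 6,
    4, 5, 1, 4, 1, 0,
    3, 2, 6, 3, 6, 7,
    1, 5, 6, 1, 6, 2,
    4, 0, 3, 4, 3, 7
};

constexpr std::size_t kVertexCount = 8;
constexpr std::size_t kVertexSize = 24; // position + color, 3 floats each

// True if every index refers to a valid vertex.
inline bool indicesInRange()
{
    for (std::uint16_t index : kCubeIndices)
    {
        if (index >= kVertexCount) return false;
    }
    return true;
}

// Pointer to the three indices of triangle t (0..11).
inline const std::uint16_t* triangle(std::size_t t)
{
    assert(t < 12);
    return &kCubeIndices[t * 3];
}

// Bytes needed with an index buffer versus duplicating a vertex per index.
constexpr std::size_t indexedBytes()
{
    return kVertexCount * kVertexSize + 36 * sizeof(std::uint16_t);
}

constexpr std::size_t nonIndexedBytes()
{
    return 36 * kVertexSize;
}
```

For this cube the indexed representation needs 264 bytes (192 for the vertices plus 72 for the indices), while storing a full vertex for each of the 36 indices would need 864 bytes.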

Only the front and back sides of the cube are shown in the image above (the top, bottom, left, and right sides are excluded from the image to keep it more clear). The edges of the back facing triangles are shown in a light gray while the edges of the front facing triangles are shown in black. A front-facing polygon is said to be facing the viewer while a back-facing polygon is said to be facing away from the viewer.

Since back-facing polygons are generally not visible to the viewer (assuming the mesh is fully convex and the material is not transparent), it is inefficient to shade pixels that will not be visible in the final render. As an optimization, it is possible to tell the Rasterizer stage to cull (remove from the rendering pipeline) back-facing polygons.

The winding-order of the triangle is used to determine if it is front-facing or back-facing. The winding order is determined by the direction of the normal of the triangle (in projected clip-space). The normal for the triangle is based on the cross-product of two edges of the triangle. Winding order can be either clockwise or counter-clockwise. The default winding order for front-facing polygons in DirectX is clockwise (so back-facing polygons are counter-clockwise).

To determine if a triangle is front-facing, the direction of the normal is computed by taking the cross product of the edges of the triangle (in projected clip-space). The normal \(\mathbf{n}\) of the triangle formed by the vertices \(\mathbf{a}\), \(\mathbf{b}\), and \(\mathbf{c}\) is:

\[
\begin{array}{rcl}
\mathbf{u} & = & \mathbf{b}-\mathbf{a} \\
\mathbf{v} & = & \mathbf{c}-\mathbf{a} \\
\mathbf{n} & = & \mathbf{u}\times\mathbf{v} \\
\end{array}
\]

In projected clip space, the \(z\) component of the resulting normal is negative if it is facing the viewer. Since it is sufficient to know if the direction of the \(z\) component of the normal is positive or negative, the equation can be reduced to:

\[
\mathbf{n}_z=(\mathbf{u}_x\mathbf{v}_y)-(\mathbf{u}_y\mathbf{v}_x)
\]

The triangle is front-facing if \(\mathbf{n}_z\lt 0\) and back-facing otherwise.
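The reduced equation translates directly into code. The sketch below is an illustration only: `Vec2`, `normalZ`, and `isFrontFacing` are hypothetical names, the inputs are assumed to be the projected x and y coordinates of the triangle's vertices, and the default clockwise front-face winding is assumed.

```cpp
#include <cassert>

// Projected 2D position of a vertex (illustration only).
struct Vec2 { float x, y; };

// z component of (b - a) x (c - a), using only the projected x and y values.
constexpr float normalZ(Vec2 a, Vec2 b, Vec2 c)
{
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Front-facing if the z component of the normal is negative.
constexpr bool isFrontFacing(Vec2 a, Vec2 b, Vec2 c)
{
    return normalZ(a, b, c) < 0.0f;
}

inline void checkWinding()
{
    // x and y of cube vertices 0, 1, and 2 (the first triangle in the index data).
    const Vec2 a{-1.0f, -1.0f};
    const Vec2 b{-1.0f, 1.0f};
    const Vec2 c{1.0f, 1.0f};
    assert(isFrontFacing(a, b, c));  // clockwise order
    assert(!isFrontFacing(a, c, b)); // reversed (counter-clockwise) order
}
```

For the clockwise triangle above, \(\mathbf{u}=(0,2)\) and \(\mathbf{v}=(2,2)\), so \(\mathbf{n}_z=(0)(2)-(2)(2)=-4\), which is negative and therefore front-facing. Swapping two vertices flips the sign.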

Tutorial2::Tutorial2

The constructor for the Tutorial2 class simply passes the initialization variables to the parent class (Game). It also initializes the scissor, viewport and field of view (fov) variables.

    Tutorial2::Tutorial2( const std::wstring& name, int width, int height, bool vSync )
        : super(name, width, height, vSync)
        , m_ScissorRect(CD3DX12_RECT(0, 0, LONG_MAX, LONG_MAX))
        , m_Viewport(CD3DX12_VIEWPORT(0.0f, 0.0f, static_cast<float>(width), static_cast<float>(height)))
        , m_FoV(45.0)
        , m_ContentLoaded(false)
    {
    }